Apple Makes You a One-Day “Millionaire”

On April 11, 1976, Apple debuted the Apple I, a computer designed by Steve Wozniak. Although it was only a circuit board, and users had to supply their own keyboard and monitor, it is historically significant as the product that established Apple’s place in the market.

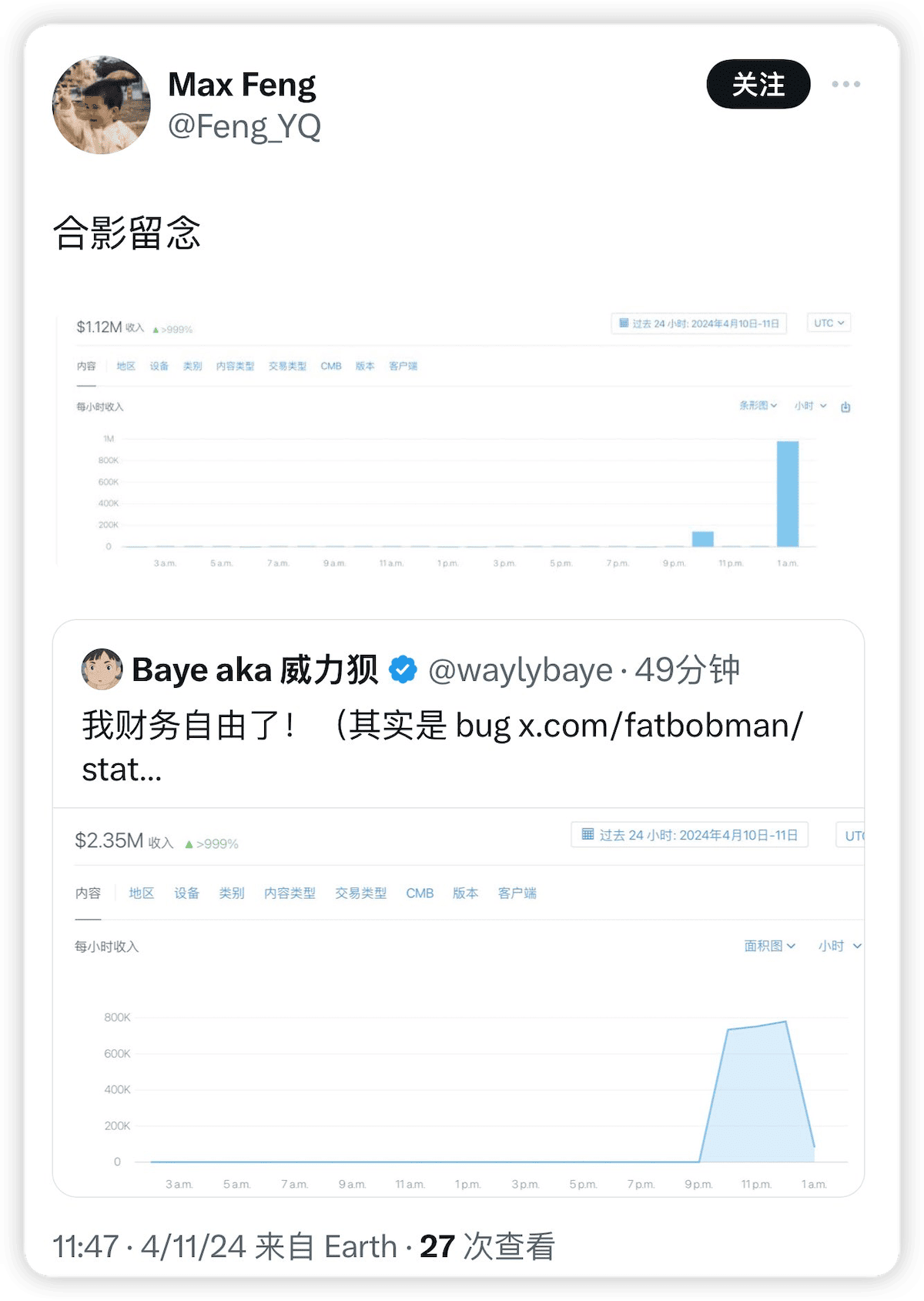

Also worth mentioning is an amusing incident from a few days ago (April 11): a bug in Apple’s App Store management website for developers caused many developers to see their sales figures jump to millions of dollars for a short time. Although the figures were quickly corrected, the episode gave the developer community a light-hearted talking point and let some developers briefly “taste” what it’s like to be a millionaire.

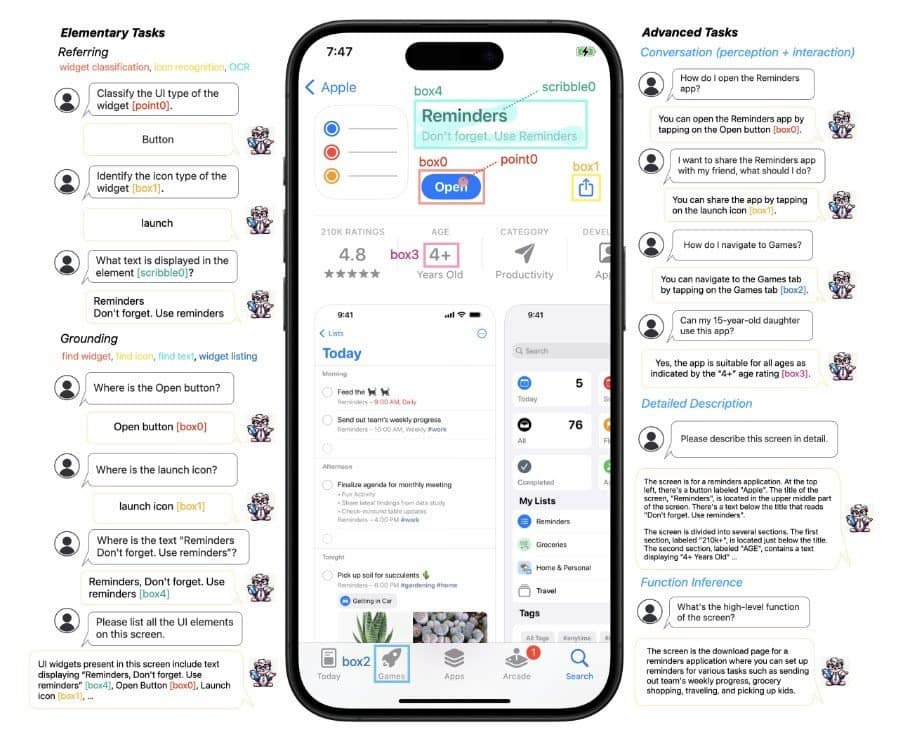

Attracting even more attention is a recent paper from Apple researchers titled Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs. The paper introduces “Ferret-UI,” a multimodal large language model designed specifically to understand and interact with mobile user interfaces, such as those on iPhone and Android devices. It can perform complex referring and interaction tasks across various input forms.

The paper reports that Ferret-UI performs well on both elementary and advanced UI tasks, outperforming most open-source UI multimodal large language models and surpassing GPT-4V on the elementary UI tasks. The result reinforces Apple’s strengths in user experience and accessibility.

With WWDC approaching, Apple is expected to showcase more innovations in on-device AI and large language models. This paper offers a glimpse of Apple’s capabilities and its future direction in this field.

Originals

The @State Specter: Analyzing a Bug in Multi-Window SwiftUI Applications

In this article, we will delve into a bug affecting SwiftUI applications in multi-window mode and propose effective temporary solutions. We will not only detail the manifestation of this issue but also share the entire process from discovery to diagnosis, and finally, resolution. Through this exploration, our aim is to offer guidance to developers facing similar challenges, helping them better navigate the complexities of SwiftUI development.

Recent Selections

SwiftLog and OSLog: Choices, Usage, and Pitfalls

Logging tools are crucial for developers: they provide timely, accurate information both during debugging and when maintaining live applications. In this article, Wang Wei takes a close look at two logging frameworks used in Swift development: SwiftLog and OSLog. SwiftLog suits cross-platform applications and scenarios that require highly customized log management, while OSLog is optimized for development on Apple platforms. The article covers writing logs, reading them back, and performance considerations, and it highlights potential issues and pitfalls when using OSLog, giving developers comprehensive guidance and practical advice.

Last week, Keith Harrison also explored in detail how to retrieve OSLog messages in his article Fetching OSLog Messages in Swift.

Splitting Up a Monolith: From 1 to 25 Swift Packages

Modular programming is a key feature in modern software development. While many developers recognize the importance of modularity, they often hesitate to overhaul existing projects due to the complexity or difficulty of starting such transformations. In this article, Ryan Ashcraft thoroughly details how he restructured a monolithic architecture into more than twenty-five Swift packages, including the challenges and compromises encountered during the process. Ryan is very satisfied with the outcome of the restructuring; although the size of the app’s package increased, there was a significant improvement in build performance and SwiftUI previews. He hopes this article will provide insights and assistance to other developers considering similar restructurings.

Get Xcode Previews Working

The preview feature is a core aspect of SwiftUI, ideally boosting developers’ efficiency significantly. However, due to its unique setup, many projects encounter frequent issues with this feature, leading to criticism from developers. In this article, Alexander discusses how to address common issues with Xcode previews and establish an effective preview environment. By sharing his personal experiences with the IronIQ project, he details the steps and strategies for configuring Xcode previews to support Swift Package Manager (SPM) and complex data stacks.

For a deeper understanding of the Preview feature’s details and technical background, consider reading Behind SwiftUI Previews and Building Stable Preview Views: How SwiftUI Previews Work.

Syncing data with CloudKit in your iOS app using CKSyncEngine and Swift

While using the CloudKit API to fetch data from servers is relatively straightforward, real-time synchronization of local and cloud data presents numerous challenges, such as complex network environments, user permission constraints, and device power management policies. To reduce the developer’s workload, Apple introduced the CKSyncEngine API at WWDC 2023; it already powers the Freeform app and the NSUbiquitousKeyValueStore API. In this article, Jordan Morgan explains the steps and techniques for simplifying data synchronization with CKSyncEngine, making it easier for developers to implement complex application syncs. It is a valuable resource for iOS developers looking to enhance user experience and achieve seamless data synchronization.

Embracing Imaginary Spatial User Experience in visionOS

With the launch of Apple Vision Pro, spatial computing devices have opened new dimensions for user interaction and experience. In this article, Francesco Perchiazzi provides a series of design guidelines and frameworks aimed at helping designers and developers create more engaging and interactive applications for visionOS. The article explores the basic principles of user experience, how to apply psychological principles in spatial computing, and the historical use of spatial design in creative problem-solving. Perchiazzi also offers specific guidelines for designing spatial objects, emphasizing the importance of integrating psychological principles and user research so that applications not only meet functional needs but also evoke emotional responses. Through these methods, developers can create captivating multisensory experiences that offer users new ways to interact.

HandVector

Although the Apple Vision Pro has been on the US market for a while, the number of applications developed for it remains limited. This is largely due to developers around the world being unable to test gesture operations in simulators without physical devices. To address this, Xander created HandVector, a library that offers an effective method for testing hand tracking on the visionOS simulator. This library includes a macOS helper application and a Swift class that connects via the Bonjour service and converts JSON data into gesture data. By using HandVector, developers can thoroughly test their applications without having physical devices, thus accelerating app development and market launch.
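To make the "JSON data into gesture data" step more concrete, here is a minimal sketch of decoding a hand-tracking frame with `Codable`. It is only an illustration: the `HandFrame` and `JointSample` types, the field names, and the sample payload are hypothetical and are not HandVector's actual schema or API. The Bonjour transport is left out entirely, so the JSON arrives here as a plain string.

```swift
import Foundation

// Hypothetical wire format for one tracked joint (NOT HandVector's real schema).
struct JointSample: Codable, Equatable {
    let name: String        // e.g. "indexFingerTip"
    let position: [Double]  // x, y, z in meters
}

// Hypothetical frame describing one hand at one moment in time.
struct HandFrame: Codable, Equatable {
    let chirality: String   // "left" or "right"
    let joints: [JointSample]
}

/// Decodes a single frame from raw JSON bytes.
func decodeHandFrame(from data: Data) throws -> HandFrame {
    try JSONDecoder().decode(HandFrame.self, from: data)
}

/// Euclidean distance between two named joints.
/// Returns nil if either joint is missing or does not have three coordinates.
func distance(in frame: HandFrame, from a: String, to b: String) -> Double? {
    guard let p = frame.joints.first(where: { $0.name == a })?.position,
          let q = frame.joints.first(where: { $0.name == b })?.position,
          p.count == 3, q.count == 3 else { return nil }
    return zip(p, q).map { ($0 - $1) * ($0 - $1) }.reduce(0, +).squareRoot()
}

// Sample payload standing in for what a helper app might send (assumed shape).
let sampleJSON = """
{"chirality":"right","joints":[
  {"name":"thumbTip","position":[0.0,0.0,0.0]},
  {"name":"indexFingerTip","position":[0.03,0.04,0.0]}
]}
"""

let frame = try decodeHandFrame(from: Data(sampleJSON.utf8))
let pinch = distance(in: frame, from: "thumbTip", to: "indexFingerTip")
```

A value such as `pinch` could then be compared against a threshold to treat the frame as a pinch gesture. The library's real data model and matching logic may differ substantially, so its documentation is the authority here.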