Despite its unassuming name, the iOS Notes app offers a wealth of powerful features, and document scanning is one I use frequently. I recently revisited a WWDC session on this feature in my spare time and learned a lot from it. Apple has long provided all the necessary tools. This article shows how to implement a feature similar to the Notes app’s document scanning using system frameworks such as VisionKit, Vision, NaturalLanguage, and CoreSpotlight.

Using VisionKit to Capture Recognizable Images

Introduction to VisionKit

VisionKit is a compact framework that enables your app to use the system’s document scanner. Present a full-screen camera view using VNDocumentCameraViewController. Receive callbacks from the document camera, such as completing a scan, by implementing VNDocumentCameraViewControllerDelegate in your view controller.

It offers developers the ability to capture and process images (perspective transformation, color processing, etc.) with the same document scanning appearance as in Notes.

How to Use VisionKit

VisionKit is straightforward and requires no configuration.

Request Camera Permission in the App

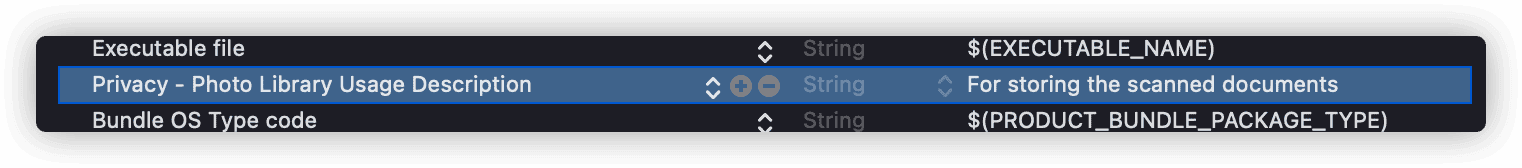

Add the NSCameraUsageDescription key to the app’s Info.plist and state the reason for using the camera.
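Before presenting the scanner, it can help to check whether scanning is possible at all. The sketch below is my own addition, not part of the original project: ScannerAvailability and scannerAvailability(isSupported:status:) are hypothetical names. It only combines two values the system already exposes, VNDocumentCameraViewController.isSupported and the camera authorization status from AVFoundation.

```swift
import AVFoundation

// Hypothetical result type describing whether the scanner can be shown.
enum ScannerAvailability: Equatable {
    case ready                  // camera access already granted
    case needsPermissionPrompt  // the user has not been asked yet
    case unavailable            // unsupported device, or access denied/restricted
}

// Hypothetical helper: a pure function, so it can be unit tested without a camera.
func scannerAvailability(isSupported: Bool, status: AVAuthorizationStatus) -> ScannerAvailability {
    guard isSupported else { return .unavailable }
    switch status {
    case .authorized:
        return .ready
    case .notDetermined:
        return .needsPermissionPrompt
    case .denied, .restricted:
        return .unavailable
    @unknown default:
        return .unavailable
    }
}

// Typical call site (on the main thread, with VisionKit imported):
// let state = scannerAvailability(
//     isSupported: VNDocumentCameraViewController.isSupported,
//     status: AVCaptureDevice.authorizationStatus(for: .video))
```

When the state is unavailable, the app could hide the Scan button or point the user to Settings instead of presenting an empty camera view.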

Creating a VNDocumentCameraViewController

VNDocumentCameraViewController doesn’t offer any configuration options; simply declare an instance to use it.

The following code demonstrates its use in SwiftUI:

    import SwiftUI
    import VisionKit

    // Wraps the system document scanner so it can be presented from SwiftUI.
    struct VNCameraView: UIViewControllerRepresentable {
        @Binding var pages: [ScanPage]
        @Environment(\.dismiss) var dismiss

        typealias UIViewControllerType = VNDocumentCameraViewController

        func makeUIViewController(context: Context) -> VNDocumentCameraViewController {
            let controller = VNDocumentCameraViewController()
            controller.delegate = context.coordinator
            return controller
        }

        func updateUIViewController(_ uiViewController: VNDocumentCameraViewController, context: Context) {}

        func makeCoordinator() -> VNCameraCoordinator {
            VNCameraCoordinator(pages: $pages, dismiss: dismiss)
        }
    }

    // One scanned page; Identifiable so it can drive a List or ForEach.
    struct ScanPage: Identifiable {
        let id = UUID()
        let image: UIImage
    }

Implementing VNDocumentCameraViewControllerDelegate

VNDocumentCameraViewControllerDelegate provides three callback methods:

- documentCameraViewController(_ controller: VNDocumentCameraViewController, didFinishWith scan: VNDocumentCameraScan)

  Informs the delegate that the user has successfully saved a document scanned from the document camera.

- documentCameraViewControllerDidCancel(_ controller: VNDocumentCameraViewController)

  Informs the delegate that the user has canceled from the document scanner camera.

- documentCameraViewController(_ controller: VNDocumentCameraViewController, didFailWithError error: Error)

  Informs the delegate of a failure in document scanning while the camera view controller is active.

    final class VNCameraCoordinator: NSObject, VNDocumentCameraViewControllerDelegate {
        @Binding var pages: [ScanPage]
        var dismiss: DismissAction

        init(pages: Binding<[ScanPage]>, dismiss: DismissAction) {
            self._pages = pages
            self.dismiss = dismiss
        }

        // The user saved the scan: copy every page into the binding.
        func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFinishWith scan: VNDocumentCameraScan) {
            for i in 0..<scan.pageCount {
                let scanPage = ScanPage(image: scan.imageOfPage(at: i))
                pages.append(scanPage)
            }
            dismiss()
        }

        func documentCameraViewControllerDidCancel(_ controller: VNDocumentCameraViewController) {
            dismiss()
        }

        func documentCameraViewController(_ controller: VNDocumentCameraViewController, didFailWithError error: Error) {
            // Log the failure so it does not disappear silently.
            print("Document scan failed: \(error)")
            dismiss()
        }
    }

VisionKit lets users scan several pages in one session. The number of pages can be queried through pageCount, and each image can be obtained using imageOfPage(at:).

Users should adjust the orientation of the scanned images to the correct display state to facilitate the next step of text recognition.

Invoking in the View

    struct ContentView: View {
        @State var scanPages = [ScanPage]()
        @State var scan = false

        var body: some View {
            VStack {
                Button("Scan") {
                    scan.toggle()
                }
                List {
                    ForEach(scanPages, id: \.id) { page in
                        HStack {
                            Image(uiImage: page.image)
                                .resizable()
                                .aspectRatio(contentMode: .fit)
                                .frame(height: 100)
                        }
                    }
                }
                .fullScreenCover(isPresented: $scan) {
                    VNCameraView(pages: $scanPages)
                        .ignoresSafeArea()
                }
            }
        }
    }

With this, you have acquired the same functionality for capturing and scanning images as in the Notes app.

Text Recognition with Vision

Introduction to Vision

In contrast to the compact VisionKit, Vision is a large, powerful framework with a wide range of uses. It employs computer vision algorithms to perform various tasks on input images and videos.

The Vision framework can perform face and facial landmark detection, text detection, barcode recognition, image registration, and object tracking. Vision also allows the use of custom Core ML models for tasks such as classification or object detection.

In this example, we will only use Vision’s text detection feature.

How to Use Vision for Text Recognition

Vision can detect and recognize multilingual text in images, with the recognition process occurring entirely on the device, ensuring user privacy. Vision offers two text detection paths (algorithms): Fast and Accurate. Fast is suitable for real-time reading scenarios like number recognition, but for our example, where we need to process the text of an entire document, the Accurate path using neural network algorithms is more appropriate.
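As a small illustration of choosing between the two paths, here is a sketch of a request factory. The function name makeTextRequest(realTime:) is hypothetical, and turning language correction off for the fast path is my own assumption about a sensible default, not something the framework requires.

```swift
import Vision

// Hypothetical factory: picks the recognition path for the use case.
func makeTextRequest(realTime: Bool) -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest()
    // .fast favors speed (live camera reading); .accurate favors quality (whole documents).
    request.recognitionLevel = realTime ? .fast : .accurate
    // Assumption: language correction mostly helps running text, so it is only
    // enabled for the document case here.
    request.usesLanguageCorrection = !realTime
    return request
}
```

For the document scenario in this article, makeTextRequest(realTime: false) gives the configuration used in the rest of the section.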

The general workflow for any kind of recognition in Vision is similar:

- Preparing the Input Image for Vision

  Vision uses VNImageRequestHandler to handle image-based requests and assumes images are upright. Therefore, consider orientation when passing images. In this example, we will use images provided by VNDocumentCameraViewController.

- Creating a Vision Request

  First, create a VNImageRequestHandler object with the image to be processed.

  Then create a VNImageBasedRequest to submit the recognition request. There are specific VNImageBasedRequest subclasses for each type of recognition. In this case, the request for recognizing text is VNRecognizeTextRequest.

  Multiple requests can be made for the same image: create all of them and pass them together to perform on a single VNImageRequestHandler instance.

- Interpreting the Detection Results

  There are two ways to access detection results: 1) check the request’s results property after calling perform, or 2) set a completion handler when creating the request object to retrieve recognition information. The results may contain multiple observations, so loop through the array to process each one.
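The steps above can be sketched with the first way of reading results, checking results after perform returns. This is a minimal sketch under a few assumptions: the orientation-mapping initializer and the recognizedLines(in:) helper are names I made up for illustration, and the typed results property assumes the iOS 15 SDK or later.

```swift
import UIKit
import Vision

// Assumption: this mapping is not part of the article. Vision takes a
// CGImagePropertyOrientation, while UIImage carries a UIImage.Orientation.
extension CGImagePropertyOrientation {
    init(_ orientation: UIImage.Orientation) {
        switch orientation {
        case .up: self = .up
        case .upMirrored: self = .upMirrored
        case .down: self = .down
        case .downMirrored: self = .downMirrored
        case .left: self = .left
        case .leftMirrored: self = .leftMirrored
        case .right: self = .right
        case .rightMirrored: self = .rightMirrored
        @unknown default: self = .up
        }
    }
}

// Hypothetical helper: runs one text request and reads `results` afterwards.
func recognizedLines(in image: UIImage) throws -> [String] {
    // No bitmap backing the image: nothing to recognize.
    guard let cgImage = image.cgImage else { return [] }

    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate

    // Tell Vision how the pixel data is rotated so it can treat the image as upright.
    let handler = VNImageRequestHandler(
        cgImage: cgImage,
        orientation: CGImagePropertyOrientation(image.imageOrientation),
        options: [:]
    )
    try handler.perform([request]) // synchronous: returns once the request has finished

    // On the iOS 15+ SDK, results is already typed as [VNRecognizedTextObservation]?.
    let observations = request.results ?? []
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

Because perform blocks until recognition finishes, a real app would call this helper off the main thread.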

A rough code example is as follows:

    import UIKit
    import Vision

    func processImage(image: UIImage) -> String {
        // Return an empty result instead of crashing when the image has no bitmap.
        guard let cgImage = image.cgImage else { return "" }
        var result = ""
        let request = VNRecognizeTextRequest { request, _ in
            guard let observations = request.results as? [VNRecognizedTextObservation] else { return }
            // Keep the best candidate for each recognized text segment.
            let recognizedStrings = observations.compactMap { observation in
                observation.topCandidates(1).first?.string
            }
            result = recognizedStrings.joined(separator: " ")
        }
        request.recognitionLevel = .accurate // Use the accurate path
        request.recognitionLanguages = ["zh-Hans", "en-US"] // Set the recognition languages
        let requestHandler = VNImageRequestHandler(cgImage: cgImage)
        do {
            // perform is synchronous, so the completion handler has run by the time it returns.
            try requestHandler.perform([request])
        } catch {
            print("error:\(error)")
        }
        return result
    }

Each recognized text segment may have several candidate strings; topCandidates(n) returns up to n of them, sorted by decreasing confidence.

recognitionLanguages defines the order of languages used during language processing and text recognition. When recognizing Chinese, Chinese should be set first.
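Since the set of supported languages depends on the recognition level and the OS version, it may be worth checking before assigning recognitionLanguages. The following is a sketch, not the article's original code: usableLanguages(preferred:supported:) and applyLanguages(_:to:) are hypothetical helpers, and the instance method supportedRecognitionLanguages() assumes iOS 15 or later.

```swift
import Vision

// Hypothetical helper: keeps only supported languages, preserving the preferred order
// (the order matters, e.g. Chinese first when recognizing Chinese).
func usableLanguages(preferred: [String], supported: [String]) -> [String] {
    let supportedSet = Set(supported)
    return preferred.filter { supportedSet.contains($0) }
}

// Hypothetical helper: applies the preferred languages to a request when possible.
func applyLanguages(_ preferred: [String], to request: VNRecognizeTextRequest) {
    // The supported list reflects the request's current recognitionLevel,
    // so set the level before calling this.
    guard let supported = try? request.supportedRecognitionLanguages() else { return }
    let usable = usableLanguages(preferred: preferred, supported: supported)
    // Leave the request's defaults untouched if nothing matches.
    if !usable.isEmpty {
        request.recognitionLanguages = usable
    }
}
```

A call such as applyLanguages(["zh-Hans", "en-US"], to: request) would then replace the direct assignment.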

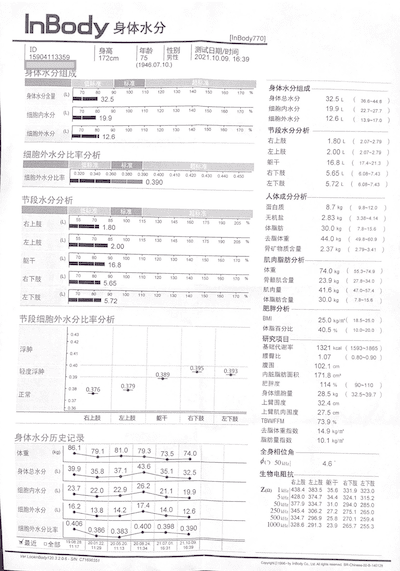

Document to be recognized:

Such documents are not suitable for natural language processing (unless extensive deep learning is involved), but they are the type of content primarily saved in health notes.

Recognition results:

InBody 身体水分 TinBody770) ID 15904113359 身高 年𠳕 性别 75 男性 测式日期/时间 ((透析)) 172cm

(1946.07.10) 2021,10.09. 16:39 身体水分组成 身体水分组成 身体水分含量((L) 60 0O 100 110 120 130

100 170 32.5 身体总水分 32 5t 30 0AA . 细胞内水分 () 70 10 GO 100 10 % 網舶内水分 19 9L 22 7-277

19.9 细胞外水分 12.6L (13 号 170 細胞外水分 (L) HOF 00 100 110 120 13o 140 160 170 % 节段水分分析

12.6 右上肢 1.80 L ( 201-279 细胞外水分比率分析 左上肢 2.00 L 2 07-2 79 低每准 魃干 16 8t 17 4 213

细胞外水分比事 0.320 0.340 0360 0 380 0.300 0.400 0410 0 420 0.430 0 440 0 450 右下胶 5.65L ( 6

08-743 0.390 左下肢 5.72 L ( 6 08-743 节段水分分析 人体成分分析 蛋白质 8.7 kg ( 9B~120 标准 无机盐

2.83 hg 3.38~4 14 右上肢 (L) 70 85 100 15 130 45 160 175 1G0 205 1.80 体脂肪 30.0 xg ( 7.8-156

左上肢 (L) 55 70 85 100 115 130 145 175 去脂体重 44.0 Mg ( 49 8~00 9 2.00 骨矿物质含量 2.37 kg (

279~3.41 躯干 (L) 70 80 90 100 110 120 130 40 150 160 170 肌肉脂肪分析 16.8 体重 74.0 xg 55 3-

74.9 右下肢 (L) 80 90 100 110 120 130 40 150 160 170 % 5.65 骨骼肌含量 23.9 kg 27 8-34 0 肌肉量

41.6 kg 47.0-57 4 左下肢 (L) 70 80 90 100 110 120 130 140 150 160 170 % 5.72 体脂肪含量 30.0

kg ( 7.8~156 肥胖分析 节段细胞外水分比率分析 BMI 25.0 kg/m ( 18.5~25 .0 体脂百分比 40.5% (10.0~200

0 43 0.42 研究项目- 浮肿 基础代谢宰 1321 kcal ( 1593~1865 腰臀比 1.07 0.80~0.90 0.395 腹围 102.1

cm 轻度浮肿 0 39 0.389 0.393 内脏脂肪面积 171.8 cm3 肥胖度 90~110 0 38 0.379 114 % 正常 0.376 身体

细胞量 28.5 kg ( 32.5~39.7 0 37 上臂围度 32.4 cm 0 36 上臂肌肉围度 27.5 cm 右上肢 左上肢 躯干 右下肢

左下肢 TBW/FFM 73.9% 去脂体重指数 身体水分历史记录 14.9 kg/m' 脂肪量指数 10.1 kg/m' 体重 (kg) 86.1

79.1 81.0 79.3 73.5 74.0 全身相位角 ¢( 50xz] 4.6 身体总水分 39.9 35.8 37.1 43.6 35. 32.5 生物电阻

抗- 细胞内水分 (L) 23.7 22.0 22.9 26.2 右上肢 左上肢躯千 右下肢 左下胶 21.1 19.9 ZQ) 1 MHlz/438.4

383.5 35.6 331.9 323.0 5 g.428.0 374.7 34.4 324.1 315.2 细胞外水分(L) 16.2 13.8 14.2 17.4

14.0 50 k1/ 377.9 334.7 31.0 294.0 285.0 12.6 250 H12/345.4 306.2 27.2 275.1 265.0 500 MHz

334.7 296.9 25.8 270.1 259.4 细胞外水分比率 0.406 0.386 0.383 0.400 0.398 0.390 1000 &H2/

328.6. 291.3 23.9 265.7 255.3 :最近 口全部 1903 28 20 01.22: 20.05 20 20 08 24 21 07 01:21

10.09 129 11 13 11.34 16.31 :1639 Ver Lookin Body120 32a6- SN. C71600359 Copyrgh(g 1296-by

InBody Co. Lat Au Pghs resaned BR-Chinese-00-B-140129

The quality of the recognition results is closely related to the quality of the document print, the angle of the shot, and the quality of the lighting.

Extracting Keywords from Text with NaturalLanguage

Health Notes is an app centered around data logging. The document scanning feature exists to meet users’ need to archive and organize paper-based examination results in one place. For that purpose, it is enough to extract a reasonable number of search keywords from the recognized text.

Introduction to NaturalLanguage

NaturalLanguage is a framework for analyzing natural language text and inferring its specific linguistic metadata. It provides various natural language processing (NLP) capabilities and supports many different languages and scripts. This framework segments natural language text into paragraphs, sentences, or words and tags these fragments with information such as parts of speech, lexical categories, phrases, scripts, and languages.

This framework can perform tasks such as:

- Language Identification

  Automatically detect the language of a text segment.

- Tokenization

  Break a text segment into linguistic units or tokens.

- Parts-of-Speech Tagging

  Tag individual words with their parts of speech.

- Lemmatization

  Derive stems from words based on morphological analysis.

- Named Entity Recognition

  Identify tokens as names of people, places, or organizations.
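To make the first two tasks concrete, here is a short sketch using NLLanguageRecognizer and NLTokenizer. The wrapper functions dominantLanguage(of:) and words(in:language:) are hypothetical names added for illustration.

```swift
import NaturalLanguage

// Language identification: returns the most likely language, or nil if undetermined.
func dominantLanguage(of text: String) -> NLLanguage? {
    NLLanguageRecognizer.dominantLanguage(for: text)
}

// Tokenization: splits text into word tokens.
func words(in text: String, language: NLLanguage? = nil) -> [String] {
    let tokenizer = NLTokenizer(unit: .word)
    tokenizer.string = text
    // Setting the language is optional; it can help when the text's language is known.
    if let language = language {
        tokenizer.setLanguage(language)
    }
    return tokenizer
        .tokens(for: text.startIndex..<text.endIndex)
        .map { String(text[$0]) }
}
```

For example, words(in: "Body water analysis") splits the phrase into its three words, and passing .simplifiedChinese as the language is the natural choice for the report text in this article.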

Approach to Extracting Keywords

In this example, the layout of physical examination reports is not friendly to text recognition, and because users submit reports in many different styles, targeted deep learning is impractical. This makes parts-of-speech tagging or entity recognition on the recognized text unreliable. Therefore, I only performed the following steps:

- Preprocessing

  Remove symbols that interfere with tokenization. In this case, since the text is obtained from VNRecognizeTextRequest, there are no control characters that could cause tokenization to crash.

- Tokenization (while removing irrelevant information)

  Create an NLTokenizer instance for tokenization. The approximate code is as follows:

      // text is the recognized string; stopWords is a lookup table of words to skip (not shown).
      let tokenizer = NLTokenizer(unit: .word) // The granularity level of the tokenizer
      tokenizer.setLanguage(.simplifiedChinese) // Set the language of the text to be tokenized
      tokenizer.string = text
      var tokenResult = [String]()
      tokenizer.enumerateTokens(in: text.startIndex..<text.endIndex) { tokenRange, attribute in
          let str = String(text[tokenRange])
          // attribute is an option set, so test membership rather than equality.
          if !attribute.contains(.numeric), stopWords[str] == nil, str.count > 1 {
              tokenResult.append(str)
          }
          return true // Continue enumerating
      }

- Deduplication

  Remove duplicate content.
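The preprocessing and deduplication steps can be sketched with Foundation alone. This is an illustration under my own assumptions rather than the code used in Health Notes: stripSymbols(_:) and deduplicated(_:) are hypothetical helpers, and treating tokens that differ only in case as duplicates is an assumption on my part.

```swift
import Foundation

// Hypothetical preprocessing helper: replaces punctuation, symbols, and control
// characters with spaces so that they cannot end up inside tokens.
func stripSymbols(_ text: String) -> String {
    let unwanted = CharacterSet.punctuationCharacters
        .union(.symbols)
        .union(.controlCharacters)
    var cleaned = String.UnicodeScalarView()
    for scalar in text.unicodeScalars {
        cleaned.append(unwanted.contains(scalar) ? " " : scalar)
    }
    return String(cleaned)
}

// Hypothetical deduplication helper: keeps the first occurrence of each token and
// preserves the original order. Case is ignored when comparing (an assumption).
func deduplicated(_ tokens: [String]) -> [String] {
    var seen = Set<String>()
    return tokens.filter { seen.insert($0.lowercased()).inserted }
}
```

stripSymbols would run on the recognized text before it is handed to the tokenizer, and deduplicated on tokenResult afterwards. Note that stripping punctuation also splits decimal numbers such as 40.5, which is acceptable here because numeric tokens are filtered out anyway.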

After the above operations, the content obtained from the image mentioned earlier (optimized for Spotlight) is as follows:

inbody 身体水分身高性别男性日期时间透析组成含量细胞hof 分析上肢比率右下下肢人体成分蛋白质标准无机盐脂肪

体重矿物质躯干肌肉骨骼bmi 百分比研究项目浮肿基础代谢腹围轻度内脏面积肥胖度正常上臂围度tbw ffm 指数历史

记录全身相位生物电阻左下mhlz 最近全部ver lookin copyrgh lat pghs resaned chinese

I have no knowledge or experience in NLP, and the process above is based solely on my intuition. Corrections are welcome if there are errors. Better results could be achieved by tuning the text recognition line height, enriching stopWords and customWords, and taking parts of speech into account. The quality of the scanned image has the biggest impact on the final results.

Implementing Full-Text Search with CoreSpotlight

In addition to saving text in Core Data for retrieval, we can also add it to the system index for users to search using Spotlight.

For information on how to add data to Spotlight and how to call Spotlight for search in an app, please refer to my other article Showcasing Core Data in Applications with Spotlight.
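For orientation, adding the extracted keywords to the system index could look roughly like the sketch below. It is not the approach from the referenced article: makeSearchableItem(id:title:keywords:) and index(_:) are hypothetical helpers, the domain identifier is a placeholder, and putting the joined keywords into contentDescription is my own assumption.

```swift
import CoreSpotlight
import UniformTypeIdentifiers

// Hypothetical helper: builds a Spotlight item from a scan's extracted keywords.
func makeSearchableItem(id: String, title: String, keywords: [String]) -> CSSearchableItem {
    let attributes = CSSearchableItemAttributeSet(contentType: .text)
    attributes.title = title
    attributes.keywords = keywords
    // Assumption: also expose the keywords as searchable description text.
    attributes.contentDescription = keywords.joined(separator: " ")
    return CSSearchableItem(
        uniqueIdentifier: id,
        domainIdentifier: "scannedDocuments", // placeholder domain
        attributeSet: attributes
    )
}

// Hypothetical helper: hands the item to the default on-device index.
func index(_ item: CSSearchableItem) {
    CSSearchableIndex.default().indexSearchableItems([item]) { error in
        if let error = error {
            print("Spotlight indexing failed: \(error)")
        }
    }
}
```

The unique identifier would normally map back to the stored record, so the app can open the right scan when the user taps a Spotlight result.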

Summary

With the APIs the system provides, developers can implement a seemingly complex feature elegantly, even without prior knowledge or experience in the field. The official APIs cover general scenarios well, and Apple deserves praise for the effort.

Some friends were concerned about the impact of the above features on the program’s size after reading this article. My test program used 330 KB after employing VisionKit, Vision, NaturalLanguage, and SwiftUI frameworks, so the impact on size is negligible. This is another significant advantage of using system APIs.
