During Let’s Vision 2025, I met Megabits, the developer of SLIT_STUDIO, and we had a great conversation. As a developer with a background in industrial design, Megabits always incorporates unique design elements into his development work. Later, I had the opportunity to review SLIT_STUDIO’s source code and found that he did a great job adapting to Swift 6. Therefore, I invited him to write this article.

Although Swift 6 has been released for some time, many of Apple’s first-party frameworks have yet to fully adopt it, creating challenges for developers who rely on them during migration. While developing the SLIT_STUDIO camera app, Megabits encountered similar issues—but chose to tackle them head-on.

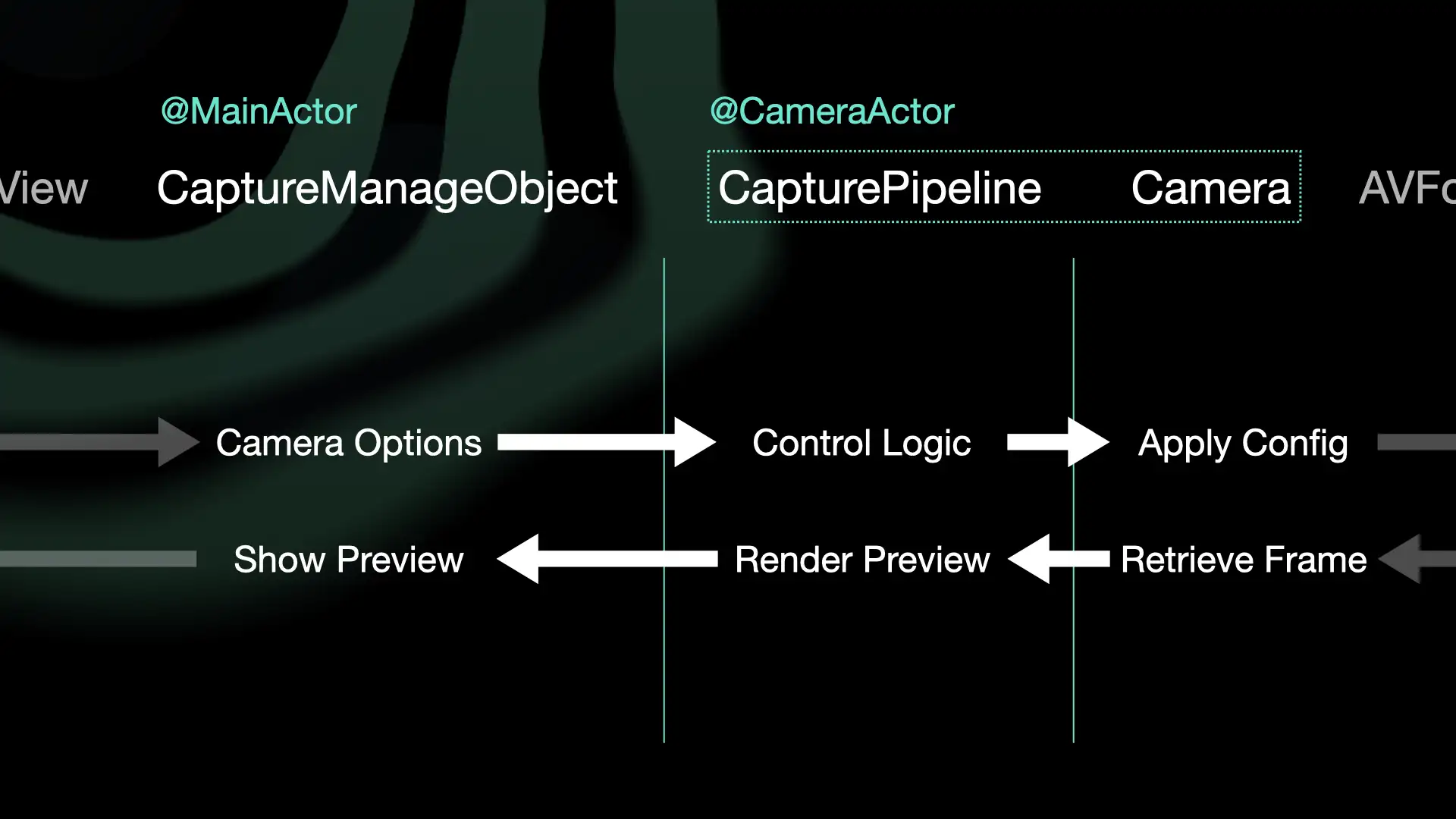

This article details how he addressed Swift 6’s new thread-safety requirements by introducing actors, a custom GlobalActor, and well-structured components such as Recorder and CaptureManageObject. These changes helped resolve incompatibilities between AVFoundation and Swift Concurrency, improved code structure and safety, and avoided reliance on temporary workarounds like @preconcurrency and nonisolated.

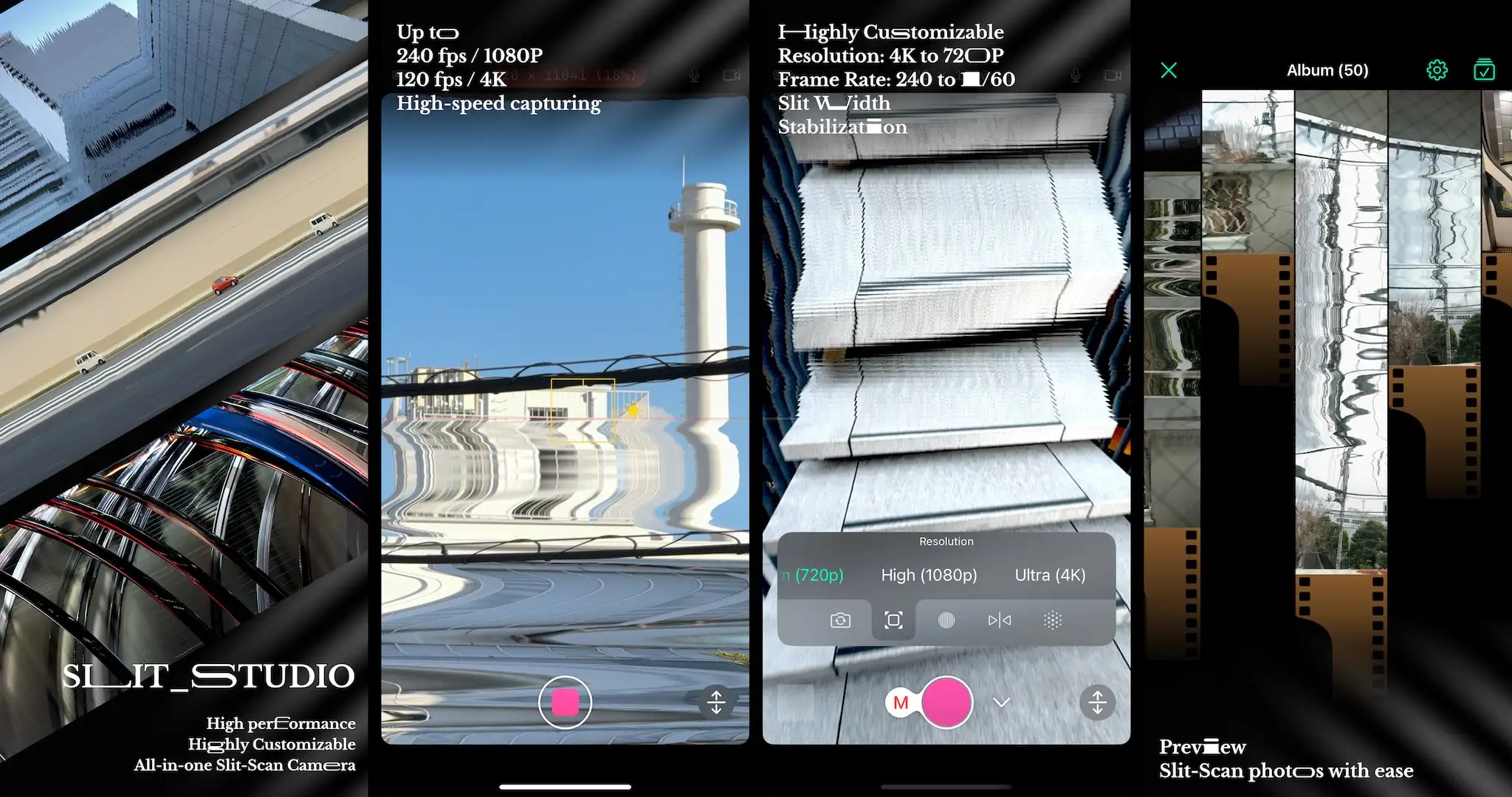

SLIT_STUDIO

Hey everyone, Megabits here! I recently wrapped up SLIT_STUDIO, a camera app that served as my graduation project for my design major. This whole development journey took an entire year and represents the culmination of my iOS development skills. It’s an app that uses the Slit-Scan technique to create some pretty cool visual effects.

The most complex parts of the app were undoubtedly the image rendering and camera logic. Within the realm of camera logic, the most difficult part was the refactoring for Swift 6.

Swift 6 was released just before I started developing the app, but I was mainly focused on getting the features working, so I didn’t upgrade right away. Plus, the early Xcode betas with Swift 6 were not very good. The errors were inconsistent between versions, so I waited until things stabilized a bit before diving in.

One of the biggest challenges with a camera app is that AVFoundation relies on GCD, which doesn’t play nicely with Swift Concurrency. For instance, when you create a data output such as AVCaptureVideoDataOutput, you have to specify the dispatch queue on which its delegate callbacks are delivered.

```swift
import AVFoundation

let output = AVCaptureVideoDataOutput()
// Sample buffers are delivered to the delegate on captureQueue, a GCD queue
output.setSampleBufferDelegate(self, queue: captureQueue)
```

This means we need some not-so-obvious ways to bridge GCD and Swift Concurrency, all while keeping everything safe.
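One common way to bridge a GCD callback into Swift Concurrency is an AsyncStream: the callback yields into the stream’s continuation, which is Sendable, and an actor consumes the stream. This is a minimal sketch, not SLIT_STUDIO’s code. It models the delegate with a hypothetical FrameSource class and uses a hypothetical Frame type in place of CMSampleBuffer so it runs without AVFoundation.

```swift
import Foundation

// Hypothetical stand-in for CMSampleBuffer, so the sketch runs without AVFoundation.
struct Frame: Sendable {
    let index: Int
}

// Hypothetical producer that mimics a GCD-based delegate: callbacks fire on a
// private serial queue, the way setSampleBufferDelegate(_:queue:) delivers frames.
final class FrameSource {
    private let queue = DispatchQueue(label: "example.capture")
    private let continuation: AsyncStream<Frame>.Continuation
    let frames: AsyncStream<Frame>

    init() {
        let (stream, continuation) = AsyncStream.makeStream(of: Frame.self)
        self.frames = stream
        self.continuation = continuation
    }

    // Simulates frameCount delegate callbacks on the queue, then ends the stream.
    func simulateCapture(frameCount: Int) {
        // Capture only the Sendable continuation, not self.
        let continuation = self.continuation
        queue.async {
            for index in 0..<frameCount {
                continuation.yield(Frame(index: index))
            }
            continuation.finish()
        }
    }
}

// Consumer isolated to an actor: frames cross from GCD into Swift Concurrency
// through the stream instead of through shared mutable state.
actor FrameProcessor {
    private(set) var processedIndices: [Int] = []

    func consume(_ frames: AsyncStream<Frame>) async {
        for await frame in frames {
            processedIndices.append(frame.index)
        }
    }
}
```

The stream above buffers without limit. In a camera pipeline, passing a policy such as `.bufferingNewest(1)` to `makeStream(of:bufferingPolicy:)` would drop stale frames instead of queueing them; whether that trade-off fits depends on the app.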

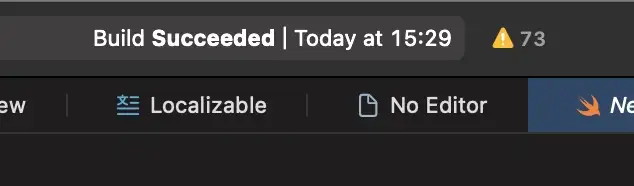

Before the Refactor

Before I started refactoring, the number of Swift 6 errors in my app wasn’t as terrifying as I had feared. Even with Complete checking enabled, there were only about 70 or 80 errors. (Okay, it still seemed scary at the time, but after dealing with Swift 6 for a while, that number doesn’t seem so bad.) This wasn’t because my code was any good; the bigger, more insidious problems were simply still lurking beneath the surface. As you make changes, expect the error count to climb temporarily as those problems come to light.

At this point, all my camera logic was crammed into a massive CapturePipeline.swift file and its extensions. The code was seriously bloated.

```swift
class CapturePipeline: NSObject, ObservableObject {
    // ... approximately 300 lines and five extensions omitted here
}
```

The CapturePipeline was handling the following responsibilities, along with their corresponding technologies:

• Updating the preview - ObservableObject
• Camera capture - AVCaptureSession
• Filter processing - Metal
• Video recording - AVAssetWriter

All of this was happening inside a single class that was also an ObservableObject. Seriously? None of the last three have any reason to run on the MainActor, yet everything was mixed together. The fact that it worked at all was pure luck, and don’t even get me started on the Sendable errors. The main goal, then, was to move code that should run on different threads into its own actor, so the compiler can help catch potential thread-safety issues while the overall code structure improves.

Starting the Refactor

When fixing Swift 6-related errors, don’t focus only on the errors themselves. Every error points to a potential thread-safety hole in your app. Instead, focus on reorganizing the app’s structure: separate the logic that can run in parallel on different threads, and isolate those parts. Whenever possible, convert classes to actors so their mutable state can’t be accessed concurrently. This naturally resolves most of the errors and keeps you out of the cycle of fixing one warning only to create three more.
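As a minimal illustration of the class-to-actor conversion, here is a hypothetical FrameStatistics type that many tasks update at once. Declared as a class with plain `var`s, concurrent `record(dropped:)` calls could race; declared as an actor, each call runs one at a time and callers outside the actor must `await` it.

```swift
// Hypothetical shared statistics. Because it is an actor, every call to
// record(dropped:) is serialized and its counters can't be mutated concurrently.
actor FrameStatistics {
    private(set) var receivedFrames = 0
    private(set) var droppedFrames = 0

    func record(dropped: Bool) {
        receivedFrames += 1
        if dropped {
            droppedFrames += 1
        }
    }
}

// Simulates many frames being reported concurrently from a task group.
func simulateFrames(count: Int) async -> (received: Int, dropped: Int) {
    let statistics = FrameStatistics()
    await withTaskGroup(of: Void.self) { group in
        for index in 0..<count {
            group.addTask {
                // Every other frame counts as dropped, purely for illustration.
                await statistics.record(dropped: index % 2 == 0)
            }
        }
    }
    let received = await statistics.receivedFrames
    let dropped = await statistics.droppedFrames
    return (received, dropped)
}
```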

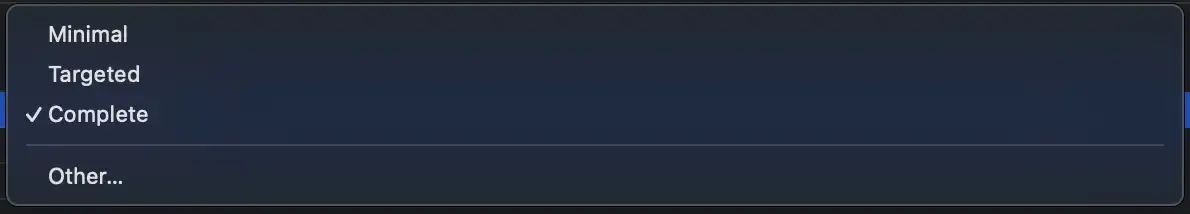

By default, Xcode’s Strict Concurrency Checking setting is at the Minimal level, which produces fewer warnings that are easier to address. You might be tempted to fix these simple issues first. However, I recommend periodically switching to the Complete level to get a broader view of potential problems. Sometimes, fixing an issue at a lower level can mask a more serious problem that only surfaces at a higher level.
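For code that lives in a Swift package, the equivalent of setting Xcode’s Strict Concurrency Checking (the `SWIFT_STRICT_CONCURRENCY` build setting) to Complete goes in the manifest. A minimal sketch, assuming a hypothetical package and target named CameraCore and a Swift 5.10 toolchain:

```swift
// swift-tools-version:5.10
import PackageDescription

let package = Package(
    name: "CameraCore", // hypothetical package name
    targets: [
        .target(
            name: "CameraCore",
            swiftSettings: [
                // Complete concurrency checking while staying in the Swift 5 language mode,
                // matching Strict Concurrency Checking = Complete in Xcode.
                .enableExperimentalFeature("StrictConcurrency")
            ]
        )
    ]
)
```

With a Swift 6 toolchain, adopting the Swift 6 language mode (for example `swiftLanguageModes: [.v6]` in a `swift-tools-version:6.0` manifest) turns the same checks into errors.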

Separating the @MainActor Interactions

I started from the View’s side, since the View always runs on the @MainActor. I created a CaptureManageObject to connect the View and the CapturePipeline, and I marked it with every attribute I could to make its safety explicit. This let me separate the parts of CapturePipeline that needed to interact with the MainActor from those that didn’t.

@MainActor

final class CaptureManageObject: NSObject, ObservableObject, Sendable {

let pipeline: CapturePipeline

init(pipeline: CapturePipeline) {

super.init()

Task {

pipeline = try await CapturePipeline(delegate: self, isPad: isPad)

}

}

@Published var scanConfig = ScanConfig() {

didSet(oldValue) {

if oldValue == scanConfig { return }

scanConfigWhileRecording = scanConfig

Task {

await pipeline?.localScanConfig = scanConfig

}

}

}

...

func startCapture(preview: Bool) async {

await pipeline?.startCapture(preview: preview || streamingMode, enableRecording: enableRecording, enableMicrophone: enableMicrophone)

}

func stopCapture() async {

await pipeline?.stopCapture()

}

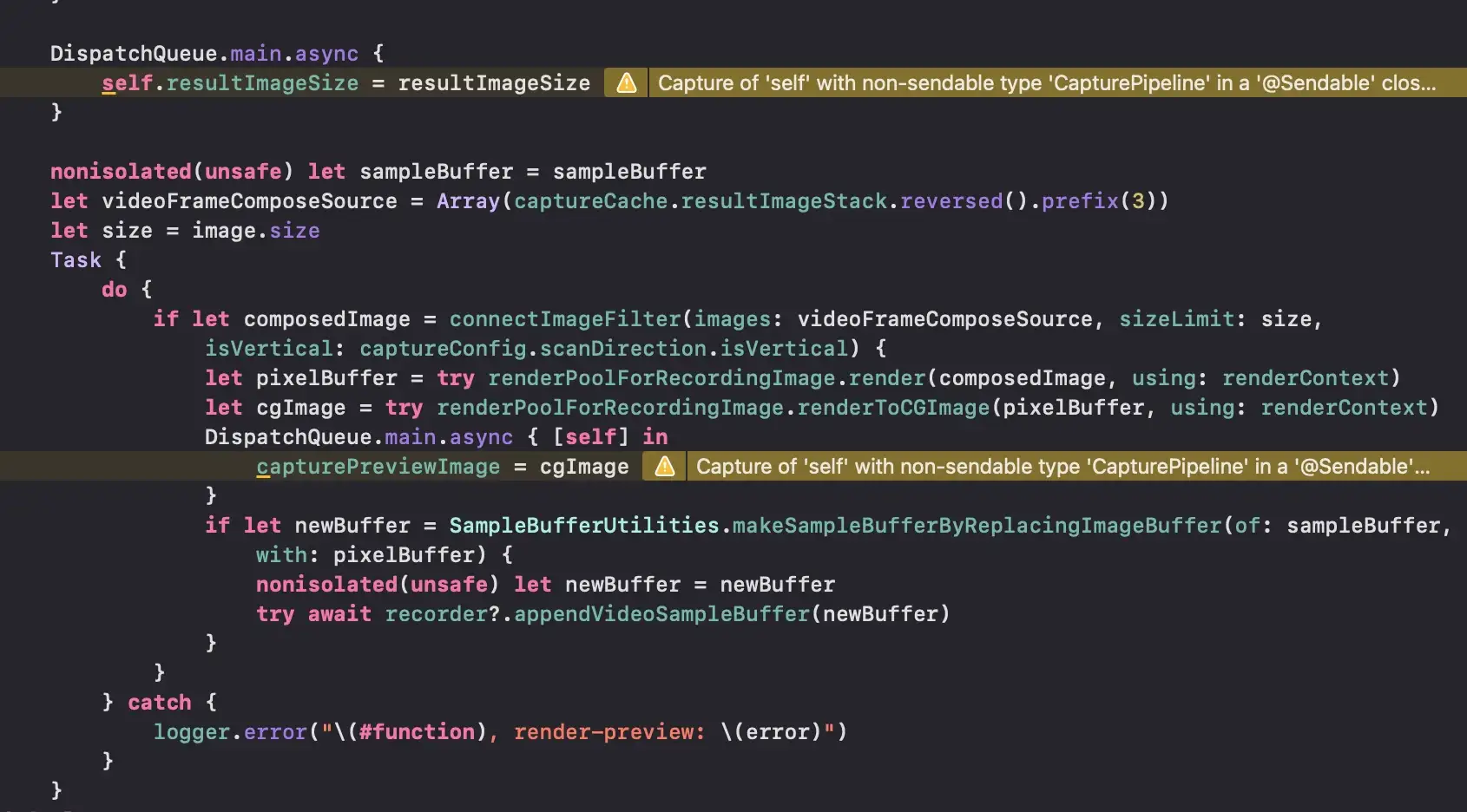

}Before this refactor, errors like the one below were all over the place. resultImageSize and capturePreviewImage were being accessed by the View. Even though I was using DispatchQueue.main.async to force writes to happen on the main thread, these variables were defined directly in the CapturePipeline, so the compiler couldn’t know if other code outside the main thread was accessing them. This resulted in the “self is not Sendable” error. You could add @MainActor to everything, but it’s cleaner to have a separate class.
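A minimal sketch of that separation, assuming hypothetical PreviewState and FrameRenderer types and a FrameSize struct in place of CGSize: the View-facing state lives in a @MainActor class, and background work reaches it only through awaited calls that pass Sendable values, rather than through DispatchQueue.main.async closures that capture a non-Sendable self.

```swift
// Hypothetical Sendable value type standing in for CGSize.
struct FrameSize: Sendable, Equatable {
    var width: Double
    var height: Double
}

// Hypothetical View-facing state. Because the whole class is @MainActor, the
// compiler knows every read and write happens on the main actor.
@MainActor
final class PreviewState {
    private(set) var resultImageSize = FrameSize(width: 0, height: 0)
    private(set) var framesShown = 0

    func show(frameSize: FrameSize) {
        resultImageSize = frameSize
        framesShown += 1
    }
}

// Hypothetical background worker. It holds the @MainActor object (implicitly
// Sendable) and hops to the main actor with an awaited call.
actor FrameRenderer {
    private let state: PreviewState

    init(state: PreviewState) {
        self.state = state
    }

    func render(width: Double, height: Double) async {
        // Rendering work would run here, off the main actor.
        let size = FrameSize(width: width, height: height)
        await state.show(frameSize: size)
    }
}
```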

I also extracted the video recording logic into its own actor because it could operate independently, only needing the buffer from each frame.

```swift
actor Recorder {
    public init(url: URL, videoOrientation: Int32 = 0, vFormatDescription: CMFormatDescription, videoSettings: [String : Any], audioSettings: [String : Any]?) throws {
        // ...
    }

    func appendVideoSampleBuffer(_ sampleBuffer: CMSampleBuffer) async throws {
        // ...
    }

    // ...
}
```

Now, the CapturePipeline was left with:

• Camera capture - AVCaptureSession
• Filter processing - Metal
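The Recorder’s method bodies are omitted above. To show why the split works, here is a simplified sketch built on a hypothetical SketchRecorder actor and a VideoFrame stand-in for CMSampleBuffer. Once all recording state lives inside an actor, callers in any context can reach it only with `await`, so an append and the final stop can never interleave.

```swift
// Hypothetical stand-in for CMSampleBuffer.
struct VideoFrame: Sendable {
    let timestamp: Double
}

enum RecorderError: Error {
    case notRecording
}

// Hypothetical, simplified recorder: the actor owns all writer state (here a
// flag, a counter and the last timestamp), so nothing outside can touch it directly.
actor SketchRecorder {
    private var isRecording = true
    private(set) var appendedFrames = 0
    private(set) var lastTimestamp: Double?

    func appendVideoFrame(_ frame: VideoFrame) throws {
        guard isRecording else { throw RecorderError.notRecording }
        // A real implementation would hand the frame to a writer
        // (e.g. an AVAssetWriterInput) here.
        lastTimestamp = frame.timestamp
        appendedFrames += 1
    }

    // Stops accepting frames and returns how many were appended.
    func finish() -> Int {
        isRecording = false
        return appendedFrames
    }
}
```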

Separating Camera Interactions

After dealing with the View interactions, it was time to tackle the other end: the camera interactions. This was trickier because, as I mentioned before, camera-related operations are stuck in the GCD era. Even if you wrap the camera code in an actor, its delegate callbacks are still delivered on GCD queues, so you’d have to mark those delegate methods nonisolated, which defeats the purpose. On top of that, every frame the camera captures goes straight through the filter: there’s no parallel processing and only one camera instance. Putting them in the same actor seemed to save a lot of trouble, which is why I used the less common GlobalActor.

```swift
@globalActor actor CameraActor: GlobalActor {
    static let shared = CameraActor()
}

@CameraActor class Camera: NSObject {
    let captureQueue = DispatchQueue(label: "com.linearCCD.capture")
    var captureSession = AVCaptureSession()
    var currentDevice: AVCaptureDevice?
    var videoInput: AVCaptureDeviceInput?
    var audioInput: AVCaptureDeviceInput?
    var videoOutput: AVCaptureVideoDataOutput?
    var audioOutput: AVCaptureAudioDataOutput?
    // ...
}

@CameraActor class CapturePipeline: NSObject, ObservableObject {
    let renderContext: MTIContext
    var renderPoolForFilter = RenderPool()
    var renderPoolForRecordingImage = RenderPool()
    var cameraFeedRenderTask: Task<(), Never>?
    // ...
}
```

This approach separated the logic while keeping both classes running serially on the same actor, which saved a lot of headaches. In the long term, once Apple updates AVFoundation or the code structure allows further refactoring, separating these parts would still be a good idea, and it would make it easier to add new features that need to run on separate threads. I tend to see the GlobalActor as a temporary solution.
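What makes the global actor convenient here is that every type annotated with it shares one serial executor, so those types can call each other synchronously. A minimal sketch, with hypothetical PipelineActor, SketchCamera and SketchPipeline types standing in for CameraActor, Camera and CapturePipeline:

```swift
// Hypothetical global actor playing the role of CameraActor.
@globalActor
actor PipelineActor {
    static let shared = PipelineActor()
}

// Both classes are isolated to the same global actor, so they share one serial
// executor and can call each other without `await`.
@PipelineActor
final class SketchCamera {
    private var nextFrameIndex = 0

    func captureFrame() -> Int {
        defer { nextFrameIndex += 1 }
        return nextFrameIndex
    }
}

@PipelineActor
final class SketchPipeline {
    private let camera: SketchCamera
    private(set) var processedFrames: [Int] = []

    init(camera: SketchCamera) {
        self.camera = camera
    }

    func processNextFrame() {
        let frame = camera.captureFrame() // same actor: a plain synchronous call
        processedFrames.append(frame * 2) // stand-in for the filter step
    }
}

// Entry point isolated to PipelineActor; code on other actors must await it.
@PipelineActor
func runPipeline(frameCount: Int) -> [Int] {
    let pipeline = SketchPipeline(camera: SketchCamera())
    for _ in 0..<frameCount {
        pipeline.processNextFrame()
    }
    return pipeline.processedFrames
}
```

Calling `runPipeline(frameCount:)` from the main actor requires `await`, which is exactly the boundary the compiler now checks, while the camera-to-filter hop inside the actor stays synchronous.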

In the end, the camera logic looked something like this:

Ignoring Errors: Proceed with Caution

Swift 6 gives us plenty of ways to silence errors, such as @preconcurrency and nonisolated(unsafe), and the compiler may even suggest adding @preconcurrency for you. But before you do, ask yourself: can these problems be solved with more conventional methods?

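For example, consider the two snippets below. Both compile, but the first is clearly safer and more straightforward: nonisolated states plainly that the callback runs outside CameraActor, and the compiler still checks what the method touches. With the second, it is much less clear how and where the method actually runs.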

```swift
extension Camera: AVCaptureVideoDataOutputSampleBufferDelegate {
    // Called on captureQueue; nonisolated makes explicit that this runs outside CameraActor.
    nonisolated func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        // ... hand the buffer over to CameraActor-isolated code here (details omitted)
    }
}
```

```swift
extension Camera: @preconcurrency AVCaptureVideoDataOutputSampleBufferDelegate {
    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        // ...
    }
}
```

When possible, we should aim for the safer solution and avoid ignoring errors unless it’s a temporary measure.
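To see what the nonisolated route asks of you, here is a minimal sketch. A hypothetical FrameOutputDelegate protocol stands in for AVCaptureVideoDataOutputSampleBufferDelegate, and a hypothetical CaptureActor stands in for CameraActor. The nonisolated callback may touch only Sendable state, so it forwards each frame through an AsyncStream continuation, and isolated code collects the frames on the actor. This is one possible pattern, not the app’s actual implementation.

```swift
import Foundation

// Hypothetical GCD-era delegate protocol: its requirement isn't isolated to any
// actor, and the "framework" calls it from its own queue.
protocol FrameOutputDelegate: AnyObject {
    func frameOutput(didOutput frameIndex: Int)
}

@globalActor
actor CaptureActor {
    static let shared = CaptureActor()
}

@CaptureActor
final class BridgedCamera: FrameOutputDelegate {
    // Sendable lets, so the nonisolated methods below may read them.
    private let frames: AsyncStream<Int>
    private let continuation: AsyncStream<Int>.Continuation
    private(set) var received: [Int] = []

    init() {
        let (stream, continuation) = AsyncStream.makeStream(of: Int.self)
        self.frames = stream
        self.continuation = continuation
    }

    // nonisolated satisfies the protocol without @preconcurrency. Only Sendable
    // state is touched; mutating `received` here would be a compile error.
    nonisolated func frameOutput(didOutput frameIndex: Int) {
        continuation.yield(frameIndex)
    }

    nonisolated func endOfCapture() {
        continuation.finish()
    }

    // Back on CaptureActor: move the forwarded frames into isolated state.
    func drainFrames() async {
        for await index in frames {
            received.append(index)
        }
    }
}

// Simulates the framework invoking the delegate from its own GCD queue.
func simulateCallbacks(to camera: BridgedCamera, count: Int, on queue: DispatchQueue) {
    queue.async {
        for index in 0..<count {
            camera.frameOutput(didOutput: index)
        }
        camera.endOfCapture()
    }
}
```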

Thoughts on Swift 6

Even though concepts like Optionals were confusing at first, they were relatively simple to grasp. Swift 6’s concurrency checking, on the other hand, requires a much broader understanding, and its errors aren’t as easy to fix on the fly. We might never get as comfortable with Swift 6 as we are with Optionals, but it’s the future, so it’s worth starting sooner rather than later.

Finally, if you’d like to try out SLIT_STUDIO, you can download it from the App Store. I’d also love to see any interesting creations you make with it!