Core Data is an object graph management framework with persistence capabilities that Apple provides for its ecosystem. It is stable (widely used throughout Apple's own system software), mature (Core Data first shipped in 2005 with Mac OS X Tiger and arrived on iOS in 2009, and its history can be traced back to the 1990s), and available out of the box (built into the entire Apple ecosystem).

Core Data's strengths lie mainly in object graph management, data description, caching, lazy loading, and memory management, but its performance in persistent data operations is only average. In fact, for a long time Core Data's competitors were fond of publishing charts that showed their overwhelming advantage over Core Data in data operation performance.

Over the past several years, Apple has gradually added batch update, batch delete, and batch insert APIs, which partly make up for Core Data's performance disadvantage when handling large amounts of data.

This article introduces Core Data's batch operations: how they work, how to use them, advanced techniques, and caveats.

Usage of Batch Operations

The official documentation does not explain batch operations in detail; instead, Apple provides a continuously updated sample project that demonstrates the workflow. This section introduces batch deletion, batch update, and batch insertion one by one, from easiest to hardest.

Batch Deletion

Batch deletion is probably the most convenient and most widely used of Core Data's batch operations.

```swift
func delItemBatch() async throws -> Int {
    // Create a private context
    let context = container.newBackgroundContext()
    // Execute on the private context's queue (to avoid blocking the view thread)
    return try await context.perform {
        // Create an NSFetchRequest for the entity to batch-delete
        let request = NSFetchRequest<NSFetchRequestResult>(entityName: "Item")
        // Predicate: all Items whose timestamp is earlier than three days ago (259,200 s).
        // Omitting the predicate deletes all Item data.
        request.predicate = NSPredicate(format: "%K < %@", #keyPath(Item.timestamp), Date.now.addingTimeInterval(-259200) as NSDate)
        // Create the batch delete request (NSBatchDeleteRequest)
        let batchDeleteRequest = NSBatchDeleteRequest(fetchRequest: request)
        // Set the result type
        batchDeleteRequest.resultType = .resultTypeCount
        // Perform the batch delete
        let result = try context.execute(batchDeleteRequest) as! NSBatchDeleteResult
        // Return the number of deleted records
        return result.result as! Int
    }
}
```

The code above deletes every Item whose timestamp is earlier than three days ago from the persistent store (the database). The comments in the code explain each step of the batch deletion.

Other things to note include:

- Batch operations are best performed on a private managed object context's queue.

- If no predicate (NSPredicate) is specified, all Item data will be deleted.

- All batch operation requests (delete, update, insert, and the NSPersistentHistoryChangeRequest used for persistent history tracking) are subclasses of NSPersistentStoreRequest.

- Batch requests are issued through the managed object context (context.execute(batchDeleteRequest)) and forwarded directly to the persistent store by the persistent store coordinator.

- The resultType property determines what a batch operation returns: statusOnly, count, or the objectIDs of all affected objects. If you want to merge the changes into the view context in the same code block after the batch operation, set the result type to resultTypeObjectIDs.

- If multiple persistent stores contain the same entity model, use affectedStores to limit a batch operation to one or a few of them. By default, the operation runs on all persistent stores. This property works the same way for all batch operations (delete, update, insert). For how to give different persistent stores the same entity model, see the corresponding chapter in Synchronize Local Database to iCloud Private Database.
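To make the last two notes concrete, here is a minimal sketch of a batch delete that requests resultTypeObjectIDs and merges the returned IDs into the view context. It is an illustration under stated assumptions, not the article's code: the model is built in code by a hypothetical makeDemoContainer() helper (an Item entity with one optional timestamp attribute) rather than an Xcode model, batchDeleteAndMerge is a hypothetical name, and it uses the synchronous generic performAndWait (macOS 12 / iOS 15 or later) instead of async/await so it runs top to bottom. The merge happens after the background block finishes, on the caller's thread, which is assumed to be the main thread.

```swift
import CoreData

// Hypothetical scaffold (not from the article): an in-code model with one
// "Item" entity holding an optional Date attribute named "timestamp".
func makeDemoContainer() throws -> NSPersistentContainer {
    let timestamp = NSAttributeDescription()
    timestamp.name = "timestamp"
    timestamp.attributeType = .dateAttributeType
    timestamp.isOptional = true
    let item = NSEntityDescription()
    item.name = "Item"
    item.managedObjectClassName = "NSManagedObject"
    item.properties = [timestamp]
    let model = NSManagedObjectModel()
    model.entities = [item]
    let container = NSPersistentContainer(name: "Demo", managedObjectModel: model)
    // Batch requests need an SQLite store, so use a throwaway file in the temp directory.
    let url = FileManager.default.temporaryDirectory.appendingPathComponent("\(UUID().uuidString).sqlite")
    container.persistentStoreDescriptions = [NSPersistentStoreDescription(url: url)]
    var loadError: Error?
    // Stores load synchronously by default, so loadError is set before the next line runs.
    container.loadPersistentStores { _, error in loadError = error }
    if let loadError = loadError { throw loadError }
    return container
}

// Batch-deletes every Item older than `cutoff`, then merges the deletions
// into the view context. Assumes the caller is on the main thread.
func batchDeleteAndMerge(in container: NSPersistentContainer, olderThan cutoff: Date) throws -> Int {
    let context = container.newBackgroundContext()
    let deletedIDs: [NSManagedObjectID] = try context.performAndWait {
        let request = NSFetchRequest<NSFetchRequestResult>(entityName: "Item")
        request.predicate = NSPredicate(format: "timestamp < %@", cutoff as NSDate)
        let batchDeleteRequest = NSBatchDeleteRequest(fetchRequest: request)
        // Only the object-ID result type gives us something to merge.
        batchDeleteRequest.resultType = .resultTypeObjectIDs
        let result = try context.execute(batchDeleteRequest) as? NSBatchDeleteResult
        return result?.result as? [NSManagedObjectID] ?? []
    }
    // Deletions go under NSDeletedObjectIDsKey.
    let changes: [AnyHashable: Any] = [NSDeletedObjectIDsKey: deletedIDs]
    NSManagedObjectContext.mergeChanges(fromRemoteContextSave: changes, into: [container.viewContext])
    return deletedIDs.count
}
```

Merging outside the background block avoids having the background queue wait on the main queue while the main thread is itself blocked in performAndWait.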

In addition to specifying the data to be deleted using NSFetchRequest, you can also use another constructor of NSBatchDeleteRequest to directly specify the NSManagedObjectID of the data to be deleted:

```swift
func batchDeleteItem(items: [Item]) async throws -> Bool {
    // NSManagedObjectID can be passed between contexts, so collect the IDs up front
    let objectIDs = items.map(\.objectID)
    let context = container.newBackgroundContext()
    return try await context.perform {
        // Create NSBatchDeleteRequest using [NSManagedObjectID]
        let batchDeleteRequest = NSBatchDeleteRequest(objectIDs: objectIDs)
        batchDeleteRequest.resultType = .resultTypeStatusOnly
        let result = try context.execute(batchDeleteRequest) as! NSBatchDeleteResult
        return result.result as! Bool
    }
}
```

This method suits scenarios where the objects (or their IDs) have already been loaded into memory. Note that all the NSManagedObjectIDs must belong to the same entity, such as Item in this example.

Batch deletion provides limited support for relationships in Core Data. See below for more details.

Batch Update

In addition to specifying the entity and predicate (which can be omitted), batch updates require the properties and values that need to be updated.

The following code updates all Item records whose timestamp is later than three days ago, setting their timestamp to the current date:

```swift
func batchUpdateItem() async throws -> [NSManagedObjectID] {
    let context = container.newBackgroundContext()
    return try await context.perform {
        // Create NSBatchUpdateRequest and set the corresponding entity
        let batchUpdateRequest = NSBatchUpdateRequest(entity: Item.entity())
        // Set the result type; this example returns the NSManagedObjectID of every changed record
        batchUpdateRequest.resultType = .updatedObjectIDsResultType
        let date = Date.now // Current date
        // Predicate: all records whose timestamp is later than three days ago
        batchUpdateRequest.predicate = NSPredicate(format: "%K > %@", #keyPath(Item.timestamp), date.addingTimeInterval(-259200) as NSDate)
        // Update dictionary [property: new value]; several properties can be set at once
        batchUpdateRequest.propertiesToUpdate = [#keyPath(Item.timestamp): date]
        // Perform the batch operation
        let result = try context.execute(batchUpdateRequest) as! NSBatchUpdateResult
        // Return the result
        return result.result as! [NSManagedObjectID]
    }
}
```

Note the following:

- In propertiesToUpdate, a misspelled property name or a value of the wrong type will crash the app.

- Batch operations skip all of Core Data's value validation, whether it is set in the data model editor or implemented in validateForXXXX methods.

- Batch updates cannot compute a new value from an attribute's current value, so an operation such as item.count += 1 still has to be done the traditional way.

- Relationship properties, and properties of related objects, cannot be modified in a batch update.

- If the updated entity is an abstract entity, includesSubentities controls whether the update also covers its sub-entities.

- Batch update predicates cannot use key paths that traverse relationships (batch delete does support them). For example, assuming Item has an attachment relationship and Attachment has a count property, the following predicate is illegal in a batch update: NSPredicate(format: "attachment.count > 10").

Batch Insert

The following code creates a given number (amount) of Item records:

```swift
func batchInsertItem(amount: Int) async throws -> Bool {
    // Create a private context
    let context = container.newBackgroundContext()
    return try await context.perform {
        // Number of records added so far
        var index = 0
        // Create an NSBatchInsertRequest with a data-building closure. While dictionaryHandler
        // returns false, Core Data keeps calling it to create data; returning true ends the insert.
        let batchRequest = NSBatchInsertRequest(entityName: "Item", dictionaryHandler: { dict in
            if index < amount {
                // Build one record. Item currently has a single property, timestamp, of type Date.
                let item = ["timestamp": Date().addingTimeInterval(TimeInterval(index))]
                dict.setDictionary(item)
                index += 1
                return false // Not finished yet, keep adding
            } else {
                return true // index == amount: the requested number of records has been added
            }
        })
        batchRequest.resultType = .statusOnly
        let result = try context.execute(batchRequest) as! NSBatchInsertResult
        return result.result as! Bool
    }
}
```

NSBatchInsertRequest provides three initializers for adding new data:

- init(entityName: String, objects: [[String : Any]]): all data must be prepared in advance as an array of dictionaries, so this method uses more memory than the other two.

- init(entityName: String, dictionaryHandler: (NSMutableDictionary) -> Bool): the method used in the example above. As with batch update, data is built with dictionaries.

- init(entityName: String, managedObjectHandler: (NSManagedObject) -> Bool): because data is built with managed objects, this method avoids the misspelled property names and mismatched value types that can slip in with method 2 (dictionaryHandler). However, since a managed object must be instantiated for each record, it is theoretically slightly slower than method 2. Compared with the traditional approach of creating data as managed object instances in a context, method 3 still has a huge memory advantage (it uses very little).

The following code uses method 3:

```swift
let batchRequest = NSBatchInsertRequest(entityName: "Item", managedObjectHandler: { obj in
    let item = obj as! Item
    if index < amount {
        // Assigning properties directly avoids the name and type errors possible with dictionaries
        item.timestamp = Date().addingTimeInterval(TimeInterval(index))
        index += 1
        return false
    } else {
        return true
    }
})
```

Other things to note:

- When creating data through dictionaries, an optional property whose value is nil can simply be omitted from the dictionary.

- Batch insertion cannot set Core Data relationships.

- When multiple persistent stores contain the same entity model, new data is written by default to the store that appears first in the persistent store coordinator's persistentStores array. Use affectedStores to choose a different store.

- By setting constraints in the data model editor, batch insertion can also (selectively) update existing data. This is explained in detail below.
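The first initializer and the note about omitting nil optionals can be shown together. This is a minimal sketch under assumptions: the model is built in code by a hypothetical makeDemoContainer() helper (an Item entity with optional title and timestamp attributes, not the article's model), batchInsertRows is a hypothetical name, and the synchronous generic performAndWait requires macOS 12 / iOS 15 or later.

```swift
import CoreData

// Hypothetical scaffold: an "Item" entity with two optional attributes.
func makeDemoContainer() throws -> NSPersistentContainer {
    let title = NSAttributeDescription()
    title.name = "title"
    title.attributeType = .stringAttributeType
    title.isOptional = true
    let timestamp = NSAttributeDescription()
    timestamp.name = "timestamp"
    timestamp.attributeType = .dateAttributeType
    timestamp.isOptional = true
    let item = NSEntityDescription()
    item.name = "Item"
    item.managedObjectClassName = "NSManagedObject"
    item.properties = [title, timestamp]
    let model = NSManagedObjectModel()
    model.entities = [item]
    let container = NSPersistentContainer(name: "Demo", managedObjectModel: model)
    // Batch requests need an SQLite store; use a throwaway file.
    let url = FileManager.default.temporaryDirectory.appendingPathComponent("\(UUID().uuidString).sqlite")
    container.persistentStoreDescriptions = [NSPersistentStoreDescription(url: url)]
    var loadError: Error?
    container.loadPersistentStores { _, error in loadError = error } // synchronous by default
    if let loadError = loadError { throw loadError }
    return container
}

// Method 1: every row is prepared up front, so all rows sit in memory at once.
func batchInsertRows(_ rows: [[String: Any]], in container: NSPersistentContainer) throws -> Bool {
    let context = container.newBackgroundContext()
    return try context.performAndWait {
        let request = NSBatchInsertRequest(entityName: "Item", objects: rows)
        request.resultType = .statusOnly
        let result = try context.execute(request) as? NSBatchInsertResult
        return result?.result as? Bool ?? false
    }
}
```

A row such as ["title": "b"] leaves timestamp out entirely, which stores it as nil.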

Merge Changes into View Context

Since batch operations are performed directly on the persistent store, the changes made to the data must be merged into the view context in some way in order to reflect the changes on the UI.

Two methods can be used:

- Enabling persistent history tracking (currently the preferred method)

For details, see Using Persistent History Tracking in CoreData. This method not only lets the current application reflect changes made by batch operations promptly, but also lets other members of the App Group (which share the database file) respond to the changes promptly.

- Integrating merge operations into batch operation code

```swift
func batchInsertItemAndMerge(amount: Int) async throws {
    let context = container.newBackgroundContext()
    try await context.perform {
        var index = 0
        let batchRequest = NSBatchInsertRequest(entityName: "Item", dictionaryHandler: { dict in
            if index < amount {
                let item = ["timestamp": Date().addingTimeInterval(TimeInterval(index))]
                dict.setDictionary(item)
                index += 1
                return false
            } else {
                return true
            }
        })
        // The result type must be objectIDs so that [NSManagedObjectID] is returned.
        batchRequest.resultType = .objectIDs
        let result = try context.execute(batchRequest) as! NSBatchInsertResult
        let objs = result.result as? [NSManagedObjectID] ?? []
        // Build a change dictionary keyed by the type of change. Insert: NSInsertedObjectIDsKey,
        // update: NSUpdatedObjectIDsKey, delete: NSDeletedObjectIDsKey.
        let changes: [AnyHashable: Any] = [NSInsertedObjectIDsKey: objs]
        // Merge the changes
        NSManagedObjectContext.mergeChanges(fromRemoteContextSave: changes, into: [self.container.viewContext])
    }
}
```
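For the first method, persistent history tracking is switched on through store options before the store is loaded. A minimal sketch: NSPersistentHistoryTrackingKey and NSPersistentStoreRemoteChangeNotificationPostOptionKey are real Core Data option keys, while historyTrackedDescription(at:) is a hypothetical helper name. Fetching and merging the recorded history (with NSPersistentHistoryChangeRequest) is a separate step covered in the article referenced above and is not shown here.

```swift
import CoreData

// Hypothetical helper: an SQLite store description with the two options
// commonly paired for persistent history tracking.
func historyTrackedDescription(at url: URL) -> NSPersistentStoreDescription {
    let description = NSPersistentStoreDescription(url: url) // defaults to an SQLite store
    // Record each transaction (batch operations included) in the store's history.
    description.setOption(true as NSNumber, forKey: NSPersistentHistoryTrackingKey)
    // Post NSPersistentStoreRemoteChange notifications when the store changes.
    description.setOption(true as NSNumber, forKey: NSPersistentStoreRemoteChangeNotificationPostOptionKey)
    return description
}
```

In use, assign it before loading, e.g. container.persistentStoreDescriptions = [historyTrackedDescription(at: storeURL)], then call loadPersistentStores.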

Fast and Efficient Batch Operations

Both Apple's own figures and developers' tests show that Core Data's batch operations have considerable advantages over the traditional way of achieving the same result (working with managed objects in a managed object context): they run fast and use little memory. Where do these advantages come from, and which important Core Data features do batch operations sacrifice to get them? This section explores these questions.

Collaboration among Components in Core Data

To understand why batch operations are fast and efficient, you need to have a certain understanding of the collaboration rules between the various components in Core Data and the mechanism for data transmission between them.

Taking as an example fetching data from Core Data and then modifying an attribute value, we can briefly trace how the components collaborate and how data flows between them (the store format is SQLite):

```swift
let date = Date.now
let request = NSFetchRequest<Item>(entityName: "Item")
request.predicate = NSPredicate(format: "%K > %@", #keyPath(Item.timestamp), date.addingTimeInterval(-259200) as NSDate)
let items = try! context.fetch(request)
for item in items {
    item.timestamp = date
}
try! context.save()
```

1. The managed object context (NSManagedObjectContext) sends the fetch request (NSFetchRequest) to the persistent store coordinator (NSPersistentStoreCoordinator) via executeRequest().
2. The persistent store coordinator converts the NSFetchRequest into the corresponding NSPersistentStoreRequest and calls its own executeRequest(_:with:) method to send the fetch request, together with the context that initiated it, to all persistent stores (NSPersistentStore).
3. The persistent store converts the NSPersistentStoreRequest into an SQL statement and sends it to SQLite.
4. SQLite executes the statement and returns all matching data to the persistent store (object IDs, the attribute contents of each row, data versions, and so on), which saves it in its row cache.
5. The persistent store instantiates the data obtained in step 4 as managed objects (here, Item) and returns them to the persistent store coordinator. Because returnsObjectsAsFaults of NSFetchRequest defaults to true, these objects are faults at this point.
6. The persistent store coordinator returns the objects instantiated in step 5, as an array of managed objects, to the context that initiated the request.
7. If the context holds new or changed objects that match the fetch conditions, it combines them with the results from step 6.
8. The items variable receives all the objects that satisfy the final conditions (they are still faults at this point).
9. When item.timestamp is set, Core Data checks whether the managed object is a fault (here it is).
10. The context sends a request to fulfill the fault to the persistent store coordinator.
11. The persistent store coordinator requests the data for that object from the persistent store.
12. The persistent store looks the data up in its row cache and returns it (here the data is already in the row cache; otherwise the persistent store would fetch it from SQLite with an SQL statement).
13. The persistent store coordinator hands the data from the persistent store over to the context.
14. The context fills the fault with the data and replaces the original timestamp with the new value.
15. The save call makes the context post NSManagedObjectContextWillSaveNotification.
16. All changed items are validated (the custom validation code in Item's validateForUpdate method and the rules defined in the model editor). If validation fails, an error is thrown.
17. The willSave method is called on every managed object (item) that is about to be updated.
18. A persistent store request (NSSaveChangesRequest) is created and passed to the persistent store coordinator's executeRequest(_:with:) method.
19. The persistent store coordinator sends the request to the persistent store.
20. The persistent store checks the data in the request against its row cache for conflicts. If a conflict occurs (the row cache changed while our context was modifying the data), it is resolved according to the merge policy.
21. The NSSaveChangesRequest is translated into SQL statements and sent to SQLite (the statements vary with the merge policy, and another conflict check is performed while SQLite saves).
22. SQLite executes the statements (Core Data has its own way of storing data in SQLite; see How Core Data saves data in SQLite for details).
23. After SQLite is updated, the persistent store updates its row cache to the current data and data version.
24. The didSave() method is called on every updated item.
25. The dirty state of the updated items and of the managed object context is cleared.
26. The managed object context posts NSManagedObjectContextDidSaveNotification, which contains the set of updated objects.

These steps may already be giving you a headache, yet we have actually omitted quite a few details.

These tedious steps can cause performance problems for Core Data in some cases, but they are also where Core Data's strength lies. They let developers work with data along many dimensions and at different points in time, and they let Core Data strike a suitable balance between performance, memory usage, and other concerns based on the state of the data. For an experienced Core Data developer, the overall benefits of Core Data still outweigh those of manipulating the database directly or using another ORM framework.

Why Are Batch Operations Fast

The traditional code above does exactly the same thing as the batch update code shown earlier. So what does Core Data do internally when the batch update code runs?

- The managed object context passes the persistent store request (NSBatchUpdateRequest) to the persistent store coordinator through execute.

- The coordinator forwards the request directly to the persistent store.

- The persistent store converts it into SQL statements and sends them to SQLite.

- SQLite executes the update statement and sends the IDs of the updated records back to the persistent store.

- The persistent store converts the IDs into NSManagedObjectIDs and returns them to the context through the coordinator.

Comparing the two processes makes it clear why batch operations are more efficient than traditional operations.

There is a trade-off: Core Data's batch operations gain their efficiency by giving up a great deal of detailed processing. Throughout the process, we lose validation, notifications, callbacks, and relationship handling.

Therefore, if your operation does not require the above skipped capabilities, batch operations are indeed a great choice.

Why Are Batch Operations Economical

For update and delete operations, batch operations do not need to extract data into memory (context, row cache), so the entire operation process hardly occupies any memory.

For batch insertion, each record built by the dictionaryHandler closure (or managedObjectHandler closure) is immediately converted into the corresponding SQL statement and sent to the persistent store, so only one record's data is held in memory at any time during creation. Compared with the traditional approach of instantiating every new object in a context, the memory usage is almost negligible.
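Because the handler is called once per record, it can pull from a lazy source so that records are produced only when Core Data asks for them. A minimal sketch under assumptions: the lazy map below stands in for a real streaming source (say, a parser), the generic batchInsert(_:in:) function and the makeDemoContainer() scaffold (an Item entity with one optional timestamp attribute) are hypothetical, and the synchronous generic performAndWait requires macOS 12 / iOS 15 or later.

```swift
import CoreData

// Hypothetical scaffold: in-code "Item" model on a throwaway SQLite file.
func makeDemoContainer() throws -> NSPersistentContainer {
    let timestamp = NSAttributeDescription()
    timestamp.name = "timestamp"
    timestamp.attributeType = .dateAttributeType
    timestamp.isOptional = true
    let item = NSEntityDescription()
    item.name = "Item"
    item.managedObjectClassName = "NSManagedObject"
    item.properties = [timestamp]
    let model = NSManagedObjectModel()
    model.entities = [item]
    let container = NSPersistentContainer(name: "Demo", managedObjectModel: model)
    let url = FileManager.default.temporaryDirectory.appendingPathComponent("\(UUID().uuidString).sqlite")
    container.persistentStoreDescriptions = [NSPersistentStoreDescription(url: url)]
    var loadError: Error?
    container.loadPersistentStores { _, error in loadError = error } // synchronous by default
    if let loadError = loadError { throw loadError }
    return container
}

// Feeds a batch insert from any Sequence of dates, one element per handler call.
func batchInsert<S: Sequence>(_ dates: S, in container: NSPersistentContainer) throws -> Bool
where S.Element == Date {
    let context = container.newBackgroundContext()
    return try context.performAndWait {
        var iterator = dates.makeIterator()
        let request = NSBatchInsertRequest(entityName: "Item", dictionaryHandler: { dict in
            // Source exhausted: returning true ends the insert.
            guard let date = iterator.next() else { return true }
            dict["timestamp"] = date
            return false // More records may follow.
        })
        request.resultType = .statusOnly
        let result = try context.execute(request) as? NSBatchInsertResult
        return result?.result as? Bool ?? false
    }
}
```

With a lazy source such as (0..<1_000).lazy.map { Date(timeIntervalSince1970: TimeInterval($0)) }, no full array of records is ever built on the app side.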

Avoiding WAL file overflow

Because batch operations use so little memory, developers tend to use them without a second thought, and it is easy to pack too many instructions into a single operation. By default, Core Data runs SQLite in WAL mode. When a transaction is very large, the WAL file grows rapidly and reaches the preset WAL checkpoint, which can easily lead to file overflow and a failed operation.

Therefore, developers still need to limit how much data each batch operation handles. If necessary, Core Data's default SQLite settings can be changed through the persistent store's options (NSSQLitePragmasOption).
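One way to limit the scale of each operation is to split a large job into several smaller batch requests. A minimal sketch, assuming an in-code Item model (the hypothetical makeDemoContainer() helper) and a chunk size you would tune for your own data; chunkRanges and chunkedBatchInsert are illustrative names, not Core Data API, and the synchronous generic performAndWait requires macOS 12 / iOS 15 or later. This sketch does not change any SQLite pragmas.

```swift
import CoreData

// Hypothetical scaffold: in-code "Item" model on a throwaway SQLite file.
func makeDemoContainer() throws -> NSPersistentContainer {
    let timestamp = NSAttributeDescription()
    timestamp.name = "timestamp"
    timestamp.attributeType = .dateAttributeType
    timestamp.isOptional = true
    let item = NSEntityDescription()
    item.name = "Item"
    item.managedObjectClassName = "NSManagedObject"
    item.properties = [timestamp]
    let model = NSManagedObjectModel()
    model.entities = [item]
    let container = NSPersistentContainer(name: "Demo", managedObjectModel: model)
    let url = FileManager.default.temporaryDirectory.appendingPathComponent("\(UUID().uuidString).sqlite")
    container.persistentStoreDescriptions = [NSPersistentStoreDescription(url: url)]
    var loadError: Error?
    container.loadPersistentStores { _, error in loadError = error } // synchronous by default
    if let loadError = loadError { throw loadError }
    return container
}

// Splits `total` records into consecutive ranges of at most `chunkSize`.
func chunkRanges(total: Int, chunkSize: Int) -> [Range<Int>] {
    precondition(chunkSize > 0, "chunkSize must be positive")
    return stride(from: 0, to: total, by: chunkSize).map { start in
        start..<min(start + chunkSize, total)
    }
}

// Inserts `total` Items using one NSBatchInsertRequest per chunk,
// so each execute writes at most `chunkSize` rows.
func chunkedBatchInsert(total: Int, chunkSize: Int, in container: NSPersistentContainer) throws {
    let context = container.newBackgroundContext()
    try context.performAndWait {
        for range in chunkRanges(total: total, chunkSize: chunkSize) {
            var index = range.lowerBound
            let request = NSBatchInsertRequest(entityName: "Item", dictionaryHandler: { dict in
                guard index < range.upperBound else { return true } // chunk done
                dict["timestamp"] = Date(timeIntervalSince1970: TimeInterval(index))
                index += 1
                return false
            })
            request.resultType = .statusOnly
            _ = try context.execute(request)
        }
    }
}
```

For example, chunkRanges(total: 10, chunkSize: 4) yields 0..<4, 4..<8, and 8..<10, so the last chunk is simply shorter.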

Advanced Techniques in Batch Operations

In addition to the capabilities mentioned above, there are some other useful techniques in batch operations.

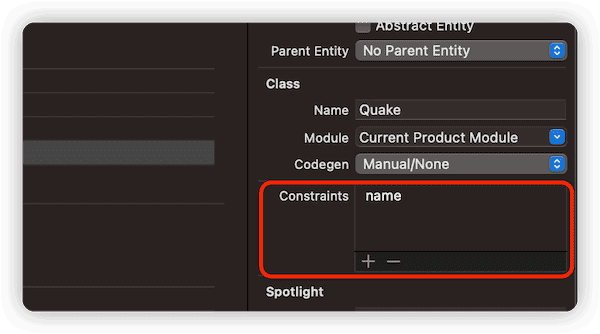

Using constraints to control batch addition behavior

In Core Data, setting one attribute (or several attributes) of an entity as a constraint in the data model editor makes the values of that attribute unique.

Because Core Data's unique constraints rely on SQLite features, batch operations naturally support them.

Suppose an application regularly downloads a huge JSON file from a server and saves the data to a database. If a property of the source data is known to be unique (an ID, a city name, a product number, etc.), it can be set as a constraint in the data model editor. When the JSON data is saved with batch insertion, Core Data acts according to the merge policy set by the developer, for example treating the new data as authoritative or keeping the data already in the database (for details on merge policies, see "Set the Correct Merge Strategy" in the article Several Tips on Core Data Concurrency Programming).

Core Data will create corresponding SQL statements for batch addition operations based on whether the constraint is enabled in the data model and what merge policy is defined. For example, in the following situations:

- Constraint not enabled

```sql
INSERT INTO ZQUAKE(Z_PK, Z_ENT, Z_OPT, ZCODE, ZMAGNITUDE, ZPLACE, ZTIME) VALUES(?, ?, ?, ?, ?, ?, ?)
```

- Constraint enabled and merge policy set to NSErrorMergePolicy

In this state, new data whose constraint attribute matches existing data is ignored (the existing data stays unchanged).

```sql
INSERT OR IGNORE INTO ZQUAKE(Z_PK, Z_ENT, Z_OPT, ZCODE, ZMAGNITUDE, ZPLACE, ZTIME) VALUES(?, ?, ?, ?, ?, ?, ?)
```

- Constraint enabled and merge policy set to NSMergePolicy.mergeByPropertyObjectTrump

In this case, the behavior becomes an update.

```sql
INSERT INTO ZQUAKE(Z_PK, Z_ENT, Z_OPT, ZCODE, ZMAGNITUDE, ZPLACE, ZTIME) VALUES(?, ?, ?, ?, ?, ?, ?) ON CONFLICT(ZCODE) DO UPDATE SET Z_OPT = Z_OPT+1 , ZPLACE = excluded.ZPLACE , ZMAGNITUDE = excluded.ZMAGNITUDE , ZTIME = excluded.ZTIME
```

Notice: Creating constraints conflicts with the functionality of Core Data with CloudKit. To understand which attributes or features cannot be enabled under Core Data with CloudKit, please refer to Core Data with CloudKit: Synchronizing Local Database to iCloud Private Database.
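The third case above amounts to an "upsert" through batch insertion. A minimal sketch, assuming a hypothetical in-code Quake entity (a unique String code and an optional String place, loosely mirroring the ZQUAKE table) built by the hypothetical makeQuakeContainer() helper; uniquenessConstraints and NSMergePolicy.mergeByPropertyObjectTrump are real Core Data API, and upsertQuakes is an illustrative name. It relies on the behavior the SQL above describes, and the synchronous generic performAndWait requires macOS 12 / iOS 15 or later.

```swift
import CoreData

// Hypothetical scaffold: "Quake" with a unique "code" (the in-code equivalent
// of adding it under Constraints in the model editor) and an optional "place".
func makeQuakeContainer() throws -> NSPersistentContainer {
    let code = NSAttributeDescription()
    code.name = "code"
    code.attributeType = .stringAttributeType
    let place = NSAttributeDescription()
    place.name = "place"
    place.attributeType = .stringAttributeType
    place.isOptional = true
    let quake = NSEntityDescription()
    quake.name = "Quake"
    quake.managedObjectClassName = "NSManagedObject"
    quake.properties = [code, place]
    quake.uniquenessConstraints = [["code"]]
    let model = NSManagedObjectModel()
    model.entities = [quake]
    let container = NSPersistentContainer(name: "Quakes", managedObjectModel: model)
    let url = FileManager.default.temporaryDirectory.appendingPathComponent("\(UUID().uuidString).sqlite")
    container.persistentStoreDescriptions = [NSPersistentStoreDescription(url: url)]
    var loadError: Error?
    container.loadPersistentStores { _, error in loadError = error } // synchronous by default
    if let loadError = loadError { throw loadError }
    return container
}

// Batch-inserts rows; a row whose "code" already exists updates that record instead.
func upsertQuakes(_ rows: [[String: Any]], in container: NSPersistentContainer) throws {
    let context = container.newBackgroundContext()
    try context.performAndWait {
        // With the constraint in place, this policy selects the ON CONFLICT ... DO UPDATE form.
        context.mergePolicy = NSMergePolicy.mergeByPropertyObjectTrump
        let request = NSBatchInsertRequest(entityName: "Quake", objects: rows)
        request.resultType = .statusOnly
        _ = try context.execute(request)
    }
}
```

Running it twice with the same code but a different place should leave one record carrying the newer place. With the default NSErrorMergePolicy, the second row would instead be ignored.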

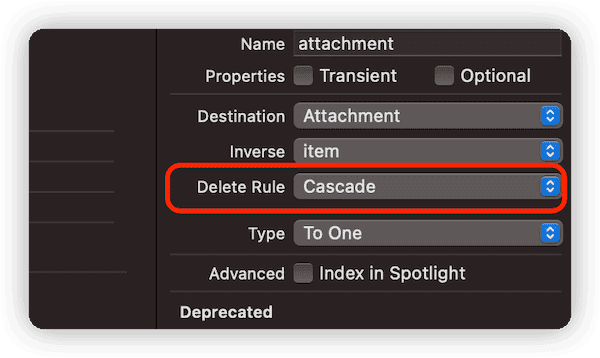

Limited Support for Batch Deletion of Core Data Relationships

In the following two cases, batch deletion can automatically perform the cleanup of relationship data:

- Relationships with Cascade deletion rules

For example, suppose Item has a relationship named attachment (one-to-one or one-to-many) whose delete rule on the Item side is Cascade. When Items are batch-deleted, Core Data automatically deletes the Attachment data associated with them.

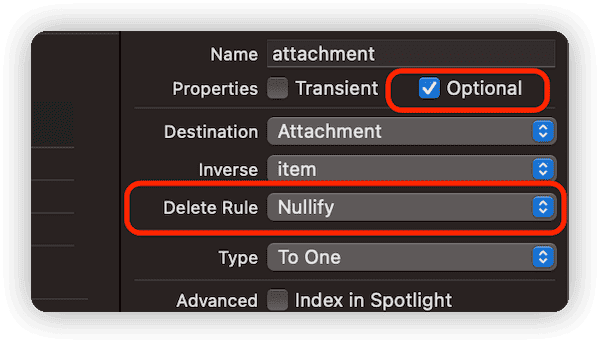

- Nullify delete rule on an optional relationship

For example, suppose Item has a relationship named attachment (one-to-one or one-to-many) whose delete rule on the Item side is Nullify, and the relationship is optional. When Items are batch-deleted, Core Data sets the relationship ID of the associated Attachment records to NULL (without actually deleting those Attachment records).

Perhaps because batch deletion provides support for some Core Data relationships, it has become the most commonly used batch operation.


Conclusion

In certain situations, batch operations make Core Data's data operations faster and reduce their memory usage. Used correctly, they can become a powerful tool for developers.